1. ENTANDO 5.3.0

1.1. ENTANDO RELEASE NOTES

1.1.1. ENTANDO 5.3.0 RELEASE NOTES

DOCUMENTATION AND DEPLOYMENT

-

New OpenShift templates and documentation including a new getting started experience to deploy Entando applications on OpenShift

-

New examples, documentation, and templates to deploy Entando applications on AWS, Azure, and Google Cloud Engine using Kubernetes

-

Added documentation and examples for deploying using an Oracle database

CONTENT MANAGEMENT

-

The Entando CMS now gives the content editor the ability to crop, flip, and generally interact with an image uploaded to the application. This includes the ability to define pre-built crop ratios that are available to image editors

-

The digital assets section of the CMS now allows for multiple images or attachments to be uploaded concurrently

-

Metadata can now be stored with an image, and that metadata can be used to automatically populate attributes defined in content types. For example, a description metadata tag on an image can be used to populate a caption or text in the content

-

The Entando CMS now supports the manual upload of very large files via digital assets. The files are loaded in chunks using Base64 encoding

-

The Entando CMS now allows configuration to exclude content by id and by type from the versioning plugin. This is generally useful when high-volume content delivered by API generates large numbers of history records.

-

For updated CMS documentation and how to use the new features see:

DIGITAL EXCHANGE

The release of Entando 5.1.0 includes the tech preview release of the Entando Digital Exchange which is a new model for distributing and installing Entando components. This release includes the ability to install and manage all of the UI elements of an Entando application. Follow our blog and YouTube channels for more to come on the Digital Exchange. For documentation and getting started see: http://developer.entando.com/#_digital_exchange

APIs AND ENDPOINTS

-

Added new PATCH API capability to merge changes to page settings endpoints and established the framework for applying a PATCH in a custom API

-

Added the refresh token flow for OAuth and implemented token refresh in the App Builder

-

Added JSON REST APIs for content models and content types

FIXES AND UPDATES

-

Resolved an issue where joinGroups weren’t updating on PUT calls

-

Resolved an issue in App Builder where in some cases the locale of the App Builder was lost on a refresh

-

Updated processing around category creation when creating a new category during the content creation workflow

-

Updated errors in the Inspinia and PAM page models included in the PAM template

-

Resolved an issue where datatype date attributes were not returned correctly in the app builder and updated data type actions in app builder

-

Resolved an issue where page model titles were not displayed correctly in some cases

-

Resolved an issue in the CMS where the action button could become replicated

-

Resolved issues related to moving page nodes in the App Builder

-

General locale and translation updates for App Builder in Italian

-

Resolved CMS image category and search issues related to category join groups

-

Updated file download flow in the CMS to correctly download files on all browsers

-

Updated scripts and builds to resolve issues in Windows

NEW CAPABILITIES IN INTEGRATION WITH RED HAT PAM

-

Added a new widget to display task details related to a selected task in Red Hat PAM. See PAM Process Task Detail widget in the app

-

Added a new widget to allow a task form to be displayed independently on a page (the modal dialog functionality is unchanged). See PAM-Task Form by DataType

-

Added significant new configuration options to the PAM Datatable task list widget, including:

  - Added configurable ability to claim a task directly from the task list
  - Added configurable ability to open a new page with task details when clicking on a row in the task list
  - Added the ability to include task variables as columns in the task list
  - Added the ability to disable the complete button in the task list

-

Added process diagram widget to Inspinia BPM default widgets

-

Updated case comment

CHANGES AND UPDATES IN 5.1.0 IN INTEGRATION WITH RED HAT PAM

-

Resolved an issue viewing the SVG for the process diagram based on browser updates

-

Resolved an issue where task details wouldn’t populate in some forms

-

Updated integration with PAM based on integration with additional process and task configurations in customer test cases

-

Updated task buttons to be fixed on the left hand side of task table

-

Resolved an issue rendering and selecting checkbox values in forms

-

Updated and resolved issues in form submission process for complex forms

-

Various updates to task list to support integration with additional PAM field types

-

Fixed round tripping for null values in forms and checkboxes

KNOWN ISSUES

-

There is a known issue rendering the case comments widget independently. A patch will be provided to resolve this issue.

-

On AWS and Azure there is a library missing to run the demo on Wildfly. A patch will be provided to resolve this issue.

-

The process list widget will not render against PAM in some process definitions. To be patched in 5.1.1

LINKS AND RESOURCES

-

Explore the code on GitHub: https://github.com/entando

-

See our Getting Started guide: http://developer.entando.com/#getting-started

-

See our Custom App Building guide: http://developer.entando.com/#_custom_app_building_with_entando

-

Contribute to the software: https://central.entando.com/en/community.page

1.1.3. ABSTRACT

This document contains release notes for Entando 5.0.2. See previous Entando release notes for notes on earlier versions.

1.1.4. CHANGES IN 5.0.2

Resolved an incorrect dependency version in entando-core and entando-components that referenced a SNAPSHOT rather than a released library.

VERSIONING INFORMATION

| Artifact | Version |
|---|---|
| entando-core | 5.0.2 |
| entando-archetypes | 5.0.2 |
| entando-ux-packages | 5.0.2 |
| entando-components | 5.0.2 |
| app-builder | 5.0.2 |

LINKS AND RESOURCES

-

Explore the code on GitHub: https://github.com/entando

-

See our Getting Started guide: http://developer.entando.com/#getting-started

-

See our Custom App Building guide: http://developer.entando.com/#_custom_app_building_with_entando

-

Contribute to the software: https://central.apps.entando.com/en/community.page

1.1.5. ENTANDO 5.0.1 RELEASE NOTES

CHANGES IN 5.0.1

Library and dependency updates

-

We have updated the following as part of a general review of dependencies and updates based on Sonar scans:

| Dependency | Updated to |
|---|---|
| Apache taglibs | 1.2.3 |
| commons-bean-utils | 1.9.2 |
| commons-io | 2.6 |
| CXF | 3.1.16 |
| Freemarker | 2.3.36-incubating |
| Junit | 4.8.2 |
| log4j | 2.10.0 |
| Spring | 5.0.8.RELEASE |
| Spring Security (new) | 5.0.7.RELEASE |
| Struts2 | 2.5.17 |
| Struts2-jquery | 4.0.3 |

Security updates

-

The dependency version updates above include changes based on Sonar OWASP dependency scans

Swagger

-

This release includes the addition of Swagger API documentation on top of the existing Entando REST endpoints

-

Entando applications can extend Entando APIs and configure endpoints to be exposed via Swagger

-

Learn more here: http://developer.entando.com/#_configuring_swagger_optional

REST API Definition

-

Resolved a bug that prevented custom package scans from exposing endpoints in Entando REST endpoints

-

Learn more about exposing REST endpoints in an Entando application: http://developer.entando.com/#_building_a_rest_api

Red Hat Process Automation Manager (PAM) plugin

-

Added a new page model to integrate and display all of the Entando case management widgets on a single page

-

Added support for new field types:

  - Check boxes
  - Radio buttons
  - List boxes
  - Text areas
  - Dates

-

Available to be added to projects as a Maven dependency

-

Resolved error condition when connecting to PAM 7.1 instances

-

Updated Form by Datatype widget to support additional fields and resolved issues related to rendering forms for different models

-

Resolved issues related to rendering PAM widgets and pages

Authentication and Authorization Model

-

The release in Entando 5.0.1 includes a change in authentication models from OLTU to Spring Security

-

Entando endpoints and user management are all now integrated with Spring security

Defect Resolution and General Updates

App Builder

-

Resolved issue displaying and selecting join groups

-

Resolved page title display

-

Resolved label issue in data type and data model

-

Resolved issue related to the display of groups for widget types, content, and resources

-

Resolved username and email display issue in User Management

-

Resolved issue moving nodes in page tree

-

Added the ability to save an empty user profile

-

Added warning about deleting locked Fragments

-

Updated display of file and folder size in File Browser

-

Updated validation rules for username and password to match API requirements

-

Updated validation rules for page model and page naming to match API requirements

CMS

-

Resolved issue with duplicated column names

-

Resolved issues editing content after adding a join group

-

Resolved breadcrumb display issue

-

Resolved edge condition in content preview actions

-

Resolved various issues surrounding the addition of attachments to content

-

Resolved issues in the addition and management of categories

Known Issues

Some dependencies in 5.0.1 still reference snapshot versions of components and other related products. This will be resolved in 5.0.2.

Versioning Information

| Artifact | Version |
|---|---|
| entando-core | 5.0.1 |
| entando-archetypes | 5.0.1 |
| entando-ux-packages | 5.0.1 |
| entando-components | 5.0.1 |
| app-builder | 5.0.1 |

Links and Resources

-

Explore the code on GitHub: https://github.com/entando

-

See our Getting Started guide: http://developer.entando.com/#getting-started

-

See our Custom App Building guide: http://developer.entando.com/#_custom_app_building_with_entando

-

Contribute to the software: https://central.apps.entando.com/en/community.page

1.1.6. ENTANDO 5.0.0 RELEASE NOTES

PREFACE

These release notes highlight new features in Entando 5.0, list features that are in technical preview, and include any known issues.

GOAL OF ENTANDO 5

The goal of Entando 5.0 is to fully engage our platform with the Modern Applications revolution by designing for a cloud-based infrastructure, adapting our architecture to be more distributed, and fully supporting DevOps methodologies.

ABOUT ENTANDO 5

Entando 5 represents a fundamental update to the Entando infrastructure to support modern application development processes including: new options for clustering, cloud native deployment, out of the box support for deploying apps in OpenShift, new UI technologies, and an updated widget development process.

These release notes provide a technical introduction to the changes included in Entando 5. Check out our Installation guide, Getting Started guide, or our blog for examples and more on putting Entando 5 to work.

WHAT’S NEW WITH ENTANDO 5

-

Infrastructure/Platform

-

Hybrid hosting environment

-

Entando 5 supports deployment in both public and private cloud configurations including deployment using Docker and OpenShift

-

-

Cloud native clustering using a JSR 107 compliant cache for scaling in the cloud

-

Entando 5 introduces the ability to back an installation of the MApp Engine with a JSR 107 cache for a multinode high availability (HA) configuration

-

A reference implementation using Infinispan is provided in the entando-components project

-

-

Container deployment

-

Deploy directly to OpenShift using images published to the Red Hat catalog

-

Deploy using Docker images provided on DockerHub

-

Install an Entando project using provided Source to Image (S2I) Docker images

-

-

New public facing REST APIs

-

Entando 5 exposes a new set of REST APIs for interacting with App Builder assets

-

-

Decoupled application builder and micro engine for updated deployment options

-

This release decouples the application builder’s front end from the Entando engine allowing the engine and App Builder to be deployed separately. The updated application builder is a ReactJS based application that uses REST APIs and can be deployed and managed separately from the MApp Engine

-

-

Upgrade to Spring 5

-

Security updates: We have performed security scans as part of the Entando 5 software release to ensure that we are using secure libraries and following secure coding practices within our application. We continue to use automated tools and manual security reviews to monitor and evaluate the security of the Entando platform. Notable updates include:

-

Various library version upgrades to match OWASP security scan results at time of release

-

Software updates to support SonarQube security scans and improve software security

-

The Entando core has been updated to Spring 5.0.5

-

-

-

Processes

-

Supports DevOps processes

-

First class support for deploying Entando projects on Docker and OpenShift

-

New archetypes to deploy to OpenShift and Docker using Fabric8

-

Created Docker base images for quick extension and deployment

-

-

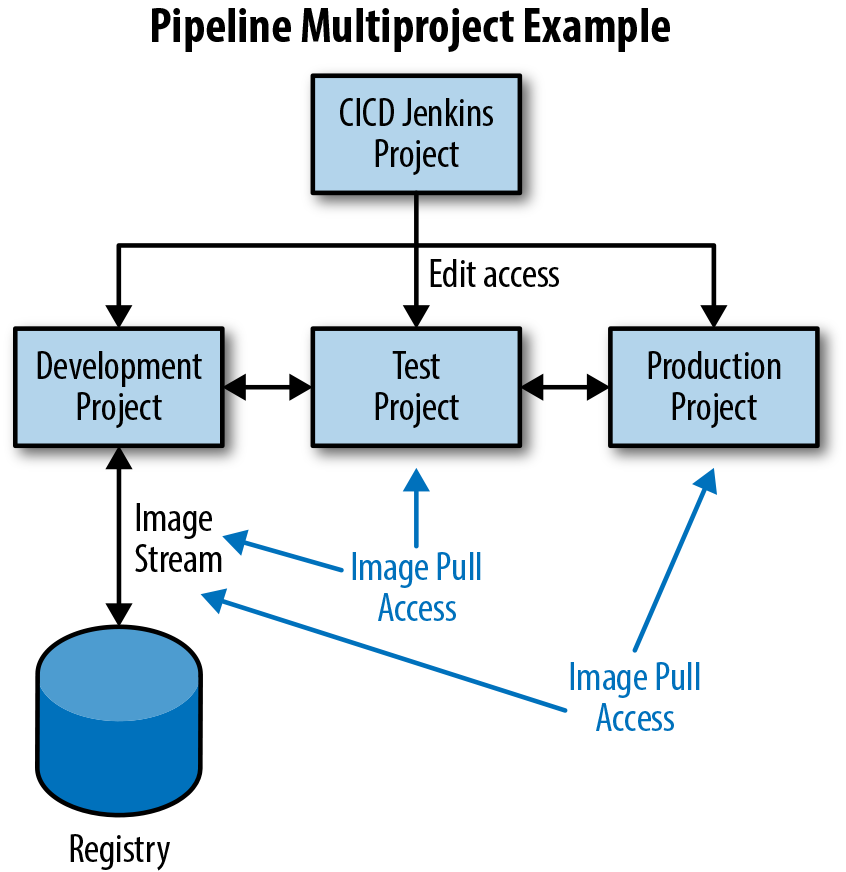

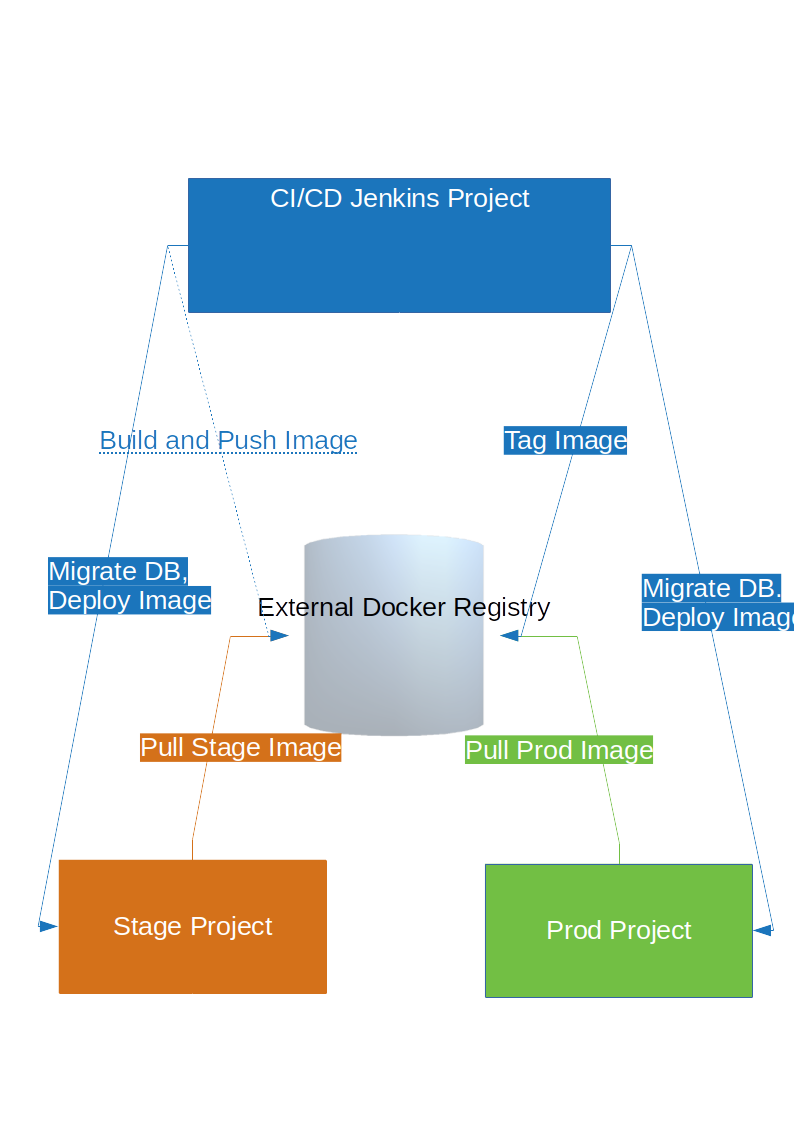

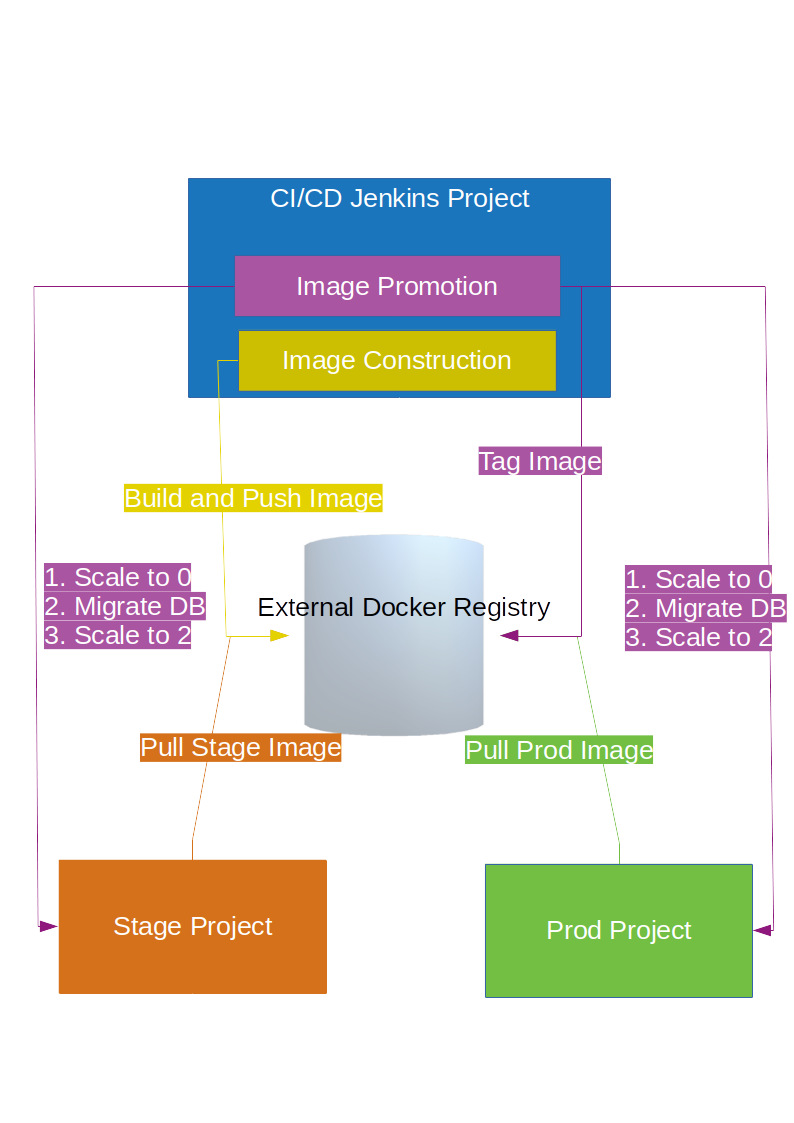

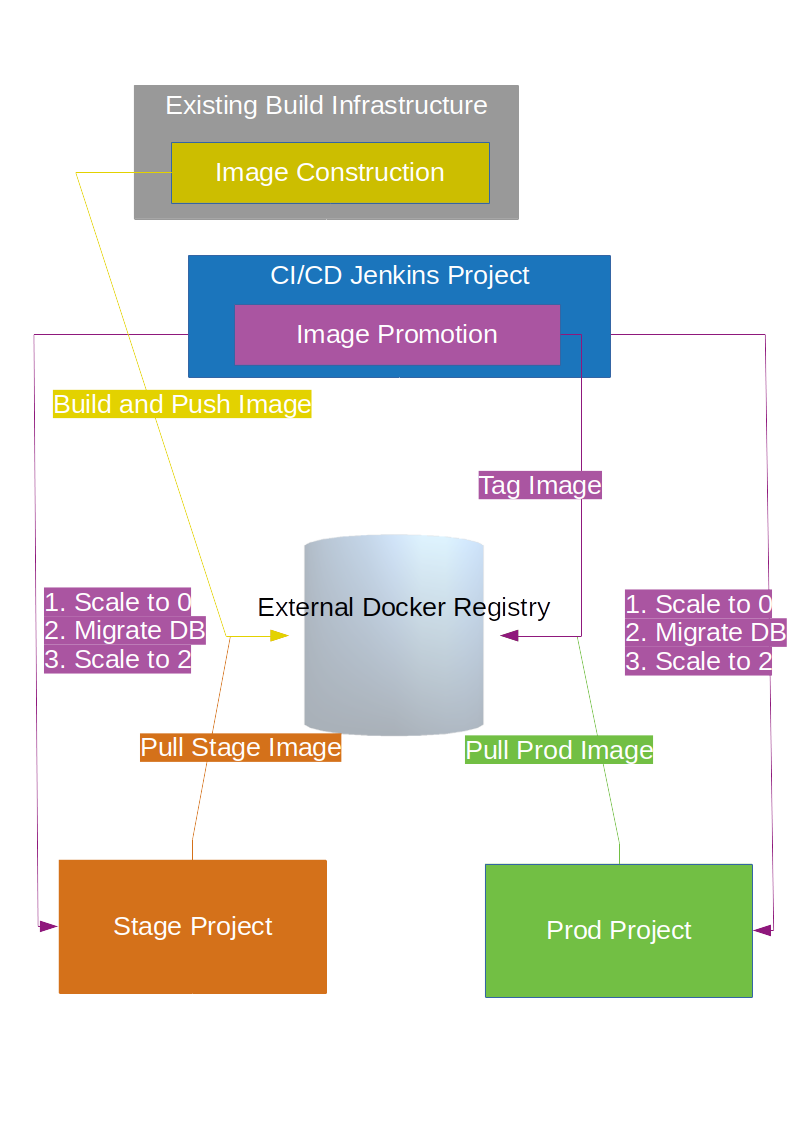

Manage full CI/CD life cycle

-

Created reference implementation of Entando Docker images in Jenkins to create, deploy, and manage an application built using Entando in a CI/CD environment

-

-

Decoupled CMS from MApp Engine

-

The CMS capability available in Entando 4.3.2 has been decoupled from the core MApp Engine implementation and will be available as a plugin to Entando 5 in a future release

-

-

New component development experience

-

The Entando Component Generator (formerly edo) has been updated to use a configuration file to more easily select different types of components to generate

-

-

KNOWN ISSUES

-

The ability to associate attributes with User Profiles is not implemented in the decoupled App Builder

-

Workaround: use the legacy application screens to add attributes to user profiles

-

Anticipated fix in first patch release

-

-

Manually editing and saving Data Types and Data Models in the decoupled App Builder has errors

-

Workaround: use the legacy application screens to edit Data Types

-

Anticipated fix in future patch release

-

-

If you move the homepage to a child of itself in the page tree it can result in browser and application stability problems

-

Anticipated fix in future patch release

-

The PAM Datatable and Task List widgets only function in the legacy App Builder

-

VERSIONING INFORMATION

| Artifact | Version |
|---|---|
| entando-core | 5.0.0 |
| entando-archetypes | 5.0.0 |
| entando-ux-packages | 5.0.0 |
| entando-vfs-manager | 5.0.0 |
| app-builder | 5.0.0 |
| MApp-Engine-admin | 5.0.0 |

LINKS AND RESOURCES

-

Explore the code on GitHub: https://github.com/entando

-

See our Getting Started guide: http://developer.entando.com/#getting-started

-

See our Custom App Building guide: http://developer.entando.com/#_custom_app_building_with_entando

-

Contribute to the software: https://central.entando.com/en/community.page

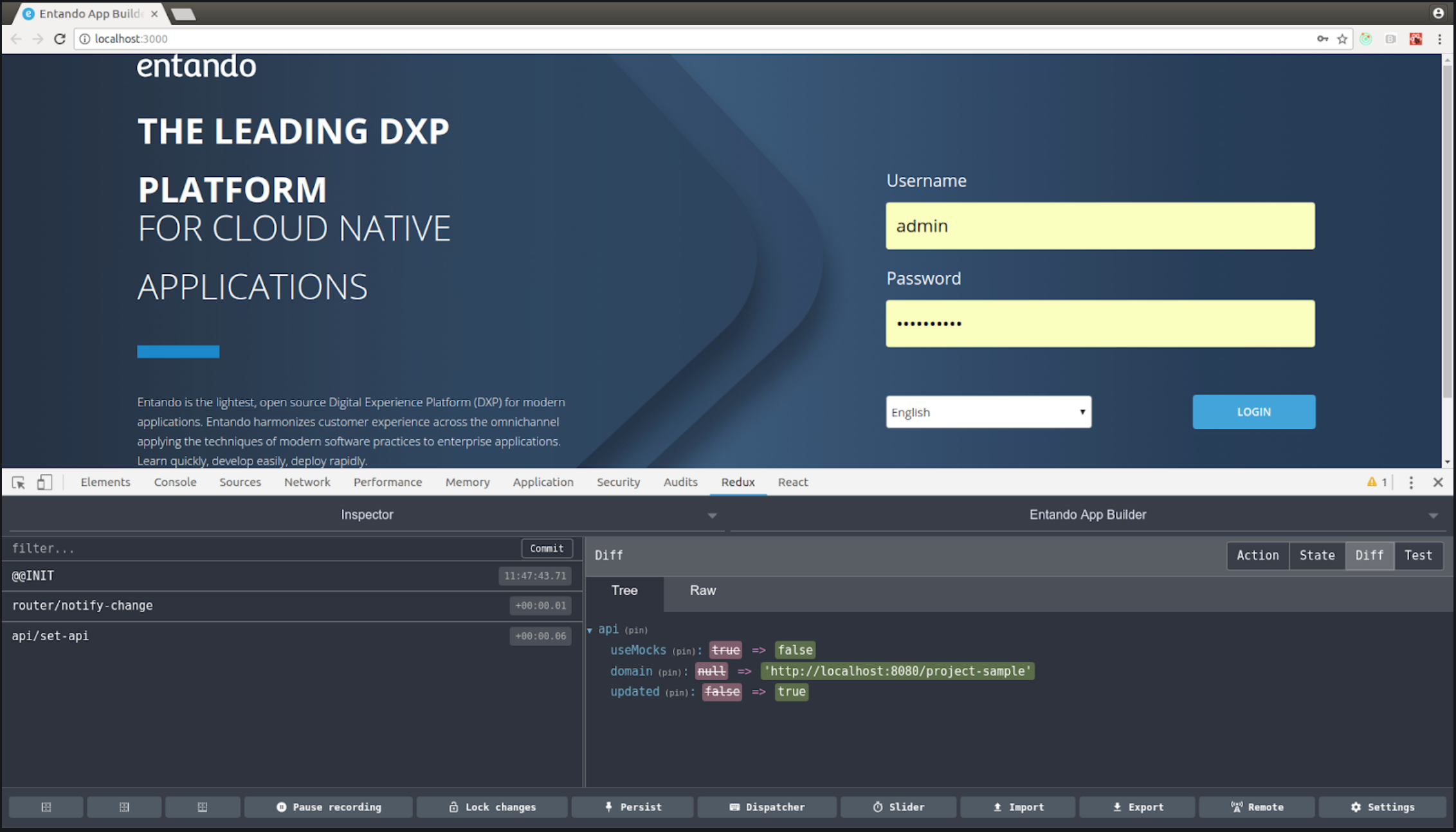

1.2. INSTALLING ENTANDO APP BUILDER 5.0

1.2.1. PREREQUISITES

1.2.2. LAUNCHING THE APP BUILDER

This section provides several options for launching the App Builder, which is used to manage a separately running MApp Engine instance. The App Builder is completely stateless and relies on the Engine to store the application configuration. If no MApp Engine is specified, the App Builder will default to providing mock data so that the functionality can be demonstrated.

BUILDING FROM SOURCE

Prerequisites:

-

git

-

npm

-

node

CLONE AND SETUP

Enter the following commands in your command line:

-

git clone https://github.com/entando/app-builder.git

-

cd app-builder

-

It is recommended to check out the release branch corresponding to the version of the MApp Engine, e.g.:

git checkout v5.0.0-release

Available release branches can be listed with:

git branch -a

-

npm install

-

npm start: starts the application in dev mode (local web server).

Note: The npm install command installs npm dependencies inside the node_modules folder.

This will give you a running app-builder instance using mock data. The Configuration section below covers connecting your App Builder to a running MApp engine.

CONFIGURATION

There are two ways to use the App Builder, depending on whether its API calls go to a live Entando Core or use mock data. The choice is controlled by the following configurable properties:

-

USE_MOCKS (boolean): a Boolean setting that determines if the App Builder uses a live connection to the Entando Core or uses mock data. A value of "true" instructs the App Builder to use mock data. A value of "false" allows the App Builder’s APIs to make live data calls to the Entando Core.

-

DOMAIN (string): a string representing the domain name of the Entando Core instance. The protocol is optional and you can specify a subdirectory of the domain. Trailing slashes are invalid and it only validates up to third-level domains.

If you set USE_MOCKS to "false," you must run an Entando Core (MApp Engine) instance and then connect the App Builder to the MApp Engine using the following steps:

-

Run the MApp Engine instance. See Running the MApp Engine section for more information.

-

Connect the MApp Engine.

If you set USE_MOCKS to "true," you do not need to run a MApp Engine instance because the App Builder only uses mock data.

To connect the MApp Engine

-

Create a file at the root of the app-builder called .env.development.local.

-

Add USE_MOCKS=false

-

Add DOMAIN=<url of running MApp Engine>

-

Run npm start in your App Builder.
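The connection steps above can also be scripted. The following is a minimal sketch and not part of the App Builder itself: the helper name write_app_builder_env is hypothetical, and the only validation it performs is the rule stated earlier that DOMAIN must not end with a trailing slash.

```shell
# Hypothetical helper (not shipped with the App Builder): writes
# .env.development.local into the given app-builder directory.
# Usage: write_app_builder_env <app-builder dir> <MApp Engine URL>
write_app_builder_env() {
  dir=$1
  domain=$2
  # The documentation states that trailing slashes are invalid in DOMAIN.
  case "$domain" in
    */) echo "DOMAIN must not end with a slash: $domain" >&2; return 1 ;;
  esac
  # USE_MOCKS=false makes the App Builder call the live Entando Core.
  printf 'USE_MOCKS=false\nDOMAIN=%s\n' "$domain" > "$dir/.env.development.local"
}
```

From the root of the app-builder checkout, a call such as write_app_builder_env . http://localhost:8080/my-app (the URL is only an example value) would be followed by npm start.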

Additional Commands

npm run lint

Runs the linter to check code style. It fails if linting rules are not matched.

npm run coverage

Runs unit tests. It fails if a unit test fails, or if the minimum coverage threshold is not met.

npm run import-plugins

Compiles and imports Entando plugins.

npm run build

Compiles the project and creates a production build.

npm run build-full

Runs npm run lint, npm run coverage, npm run import-plugins and npm run build.
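The build-full sequence can be pictured as the four scripts run one after another. The sketch below assumes the chain stops at the first failing step, which the text above does not state explicitly. The function name and its optional runner argument are hypothetical and exist only so the sequence can be exercised without npm installed.

```shell
# Sketch of what "npm run build-full" is described as doing: run the four
# scripts in order. Stopping at the first failure is an assumption.
# The optional first argument (the command to invoke, default: npm) is a
# hypothetical hook added for illustration.
run_build_full() {
  runner=${1:-npm}
  for script in lint coverage import-plugins build; do
    "$runner" run "$script" || return 1
  done
}
```

Calling run_build_full with no argument inside the app-builder directory would invoke npm run lint, npm run coverage, npm run import-plugins, and npm run build in turn.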


1.2.3. RUNNING THE MAPP ENGINE INSTANCE

CREATING A NEW ENTANDO PROJECT

Set up an Entando application via a Maven archetype. The archetype creates the project and structure needed to develop and add to an application.

Steps:

1. Open your command line and navigate to the directory that will house your new project.

2. Run the following command:

       mvn archetype:generate -Dfilter=entando-archetype-webapp-generic

3. Select Option 1, displayed as follows:

       1: remote > org.entando.entando:entando-archetype-webapp-generic (Generic web app Archetype for Entando: an agile, modern and user-centric open source web app like platform.)

4. If prompted for a version, select 5.0.0.

5. Enter values for groupId, artifactId, version, and package. These values go into the Maven setup in the new project. See the Maven documentation for more information on these values. https://maven.apache.org/guides/mini/guide-naming-conventions.html

6. Open the directory created by the Maven archetype in Step 2. The directory will have the same name as the value you entered for artifactId in Step 5. This directory contains all of the structure necessary to start the Entando MApp Engine and execute the application. See the Launching the MApp Engine section below for instructions on starting the newly created project.
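For scripted setups, the interactive prompts above can in principle be replaced by passing every value on the command line. The sketch below only prints such a command. It takes the archetype coordinates from the option listing above and uses standard Maven Archetype Plugin properties. Whether the Entando archetype needs further answers in non-interactive mode is not confirmed by this guide, and the function name is hypothetical.

```shell
# Prints (does not run) a non-interactive equivalent of the steps above.
# The archetype coordinates come from the option shown in the listing; the
# -D properties are standard maven-archetype-plugin parameters.
# Arguments: <groupId> <artifactId> <version> <package>
entando_archetype_cmd() {
  printf 'mvn archetype:generate'
  printf ' -DarchetypeGroupId=org.entando.entando'
  printf ' -DarchetypeArtifactId=entando-archetype-webapp-generic'
  printf ' -DarchetypeVersion=5.0.0'
  printf ' -DgroupId=%s -DartifactId=%s -Dversion=%s -Dpackage=%s' "$1" "$2" "$3" "$4"
  printf ' -DinteractiveMode=false\n'
}
```

For example, entando_archetype_cmd com.example my-app 1.0-SNAPSHOT com.example.myapp prints a single command line that can be reviewed before it is run; the argument values here are placeholders.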

LAUNCHING THE MAPP ENGINE

QUICK START USING DERBY AND JETTY

Once you have created an Entando application, you can launch it using Jetty and an embedded Derby database.

To do so, run the following command inside your project:

mvn clean jetty:run

Once started the logs will print the following messages:

[INFO] Started SelectChannelConnector@0.0.0.0:8080

[INFO] Started Jetty Server

[INFO] Starting scanner at interval of 5 seconds

These messages indicate that your application is running. To open your application, point your browser to port 8080 on the host running Jetty, using your app name as the path.
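When the quick start is launched from a script, the startup message quoted above can serve as a readiness signal. The following sketch assumes the Jetty output has been redirected to a file; the function name and the log file name are hypothetical.

```shell
# Returns success once the given log file contains the startup line quoted
# above. Assumes the Jetty output was captured to a file, for example with
# "mvn clean jetty:run > jetty.log 2>&1 &" (the file name is hypothetical).
jetty_started() {
  grep -q 'Started Jetty Server' "$1"
}
```

A caller could poll with a loop such as until jetty_started jetty.log; do sleep 2; done before opening the browser.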

1.2.4. BUILDING THE MAPP ENGINE FROM SOURCE CODE

To download the latest source code:

-

Open your terminal and create an empty directory for your project:

mkdir ~/my_new_project

-

Move to the new directory:

cd ~/my_new_project

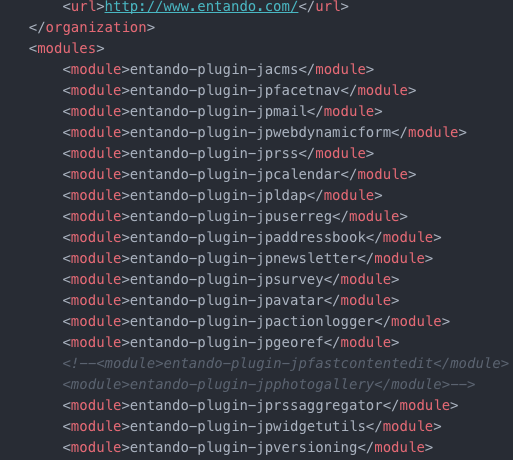

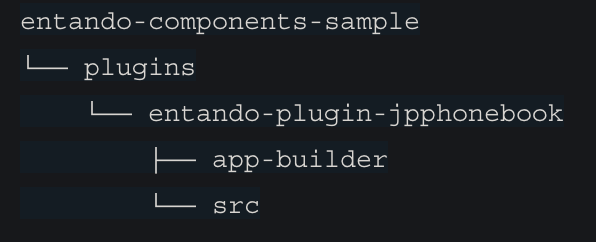

Clone the following repositories IN ORDER: entando-core, entando-components, entando-archetypes, entando-ux-packages projects:

-

Entando-core:

git clone https://github.com/entando/entando-core -

Entando-components:

git clone https://github.com/entando/entando-components -

Entando-archetypes:

git clone https://github.com/entando/entando-archetypes

-

-

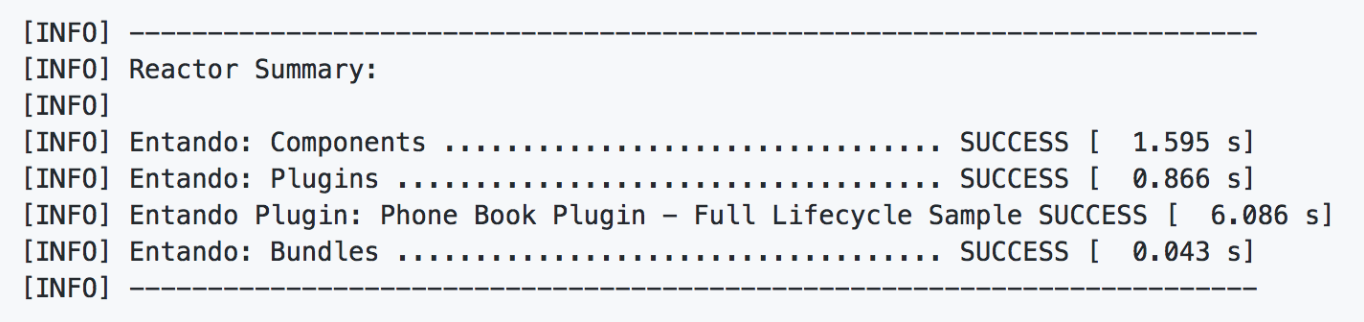

Install, IN ORDER, the entando-core, entando-components, entando-archetypes projects:

-

cd entando-core

mvn clean install -DskipTests -

cd entando-components

mvn clean install -DskipTests -

cd entando-archetypes

mvn clean install -DskipTests

-

-

Complete the download by following the steps from the Creating a New Entando Project section.

At this point you have installed all of the dependencies required to build a new MApp Engine instance.
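The clone and install sequence above lends itself to a small loop. The sketch below only prints the commands, in the required order, so they can be reviewed before being run from inside the project directory. The function name is hypothetical, and only the three repositories whose clone commands appear above are covered.

```shell
# Prints (does not run) the clone and install commands for the three
# repositories, in the order the steps above require.
entando_source_build_cmds() {
  for repo in entando-core entando-components entando-archetypes; do
    echo "git clone https://github.com/entando/$repo"
  done
  for repo in entando-core entando-components entando-archetypes; do
    # The subshell keeps each cd from affecting the next step.
    echo "(cd $repo && mvn clean install -DskipTests)"
  done
}
```

Piping the output to sh, as in entando_source_build_cmds | sh, would execute the steps. Wrapping each install in a subshell avoids having to change back to the parent directory between projects.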

Note: The command to use the artifacts you have installed locally with an additional switch on the archetype command to use local values only is:

1.2.5. SETTING UP A DATABASE (OPTIONAL)

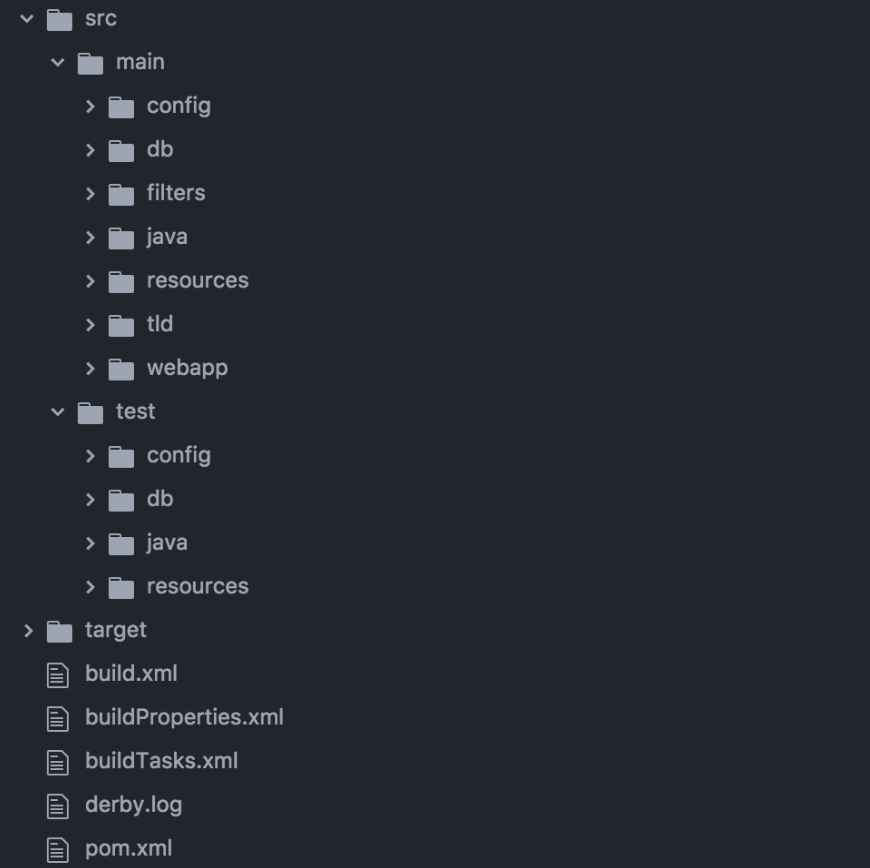

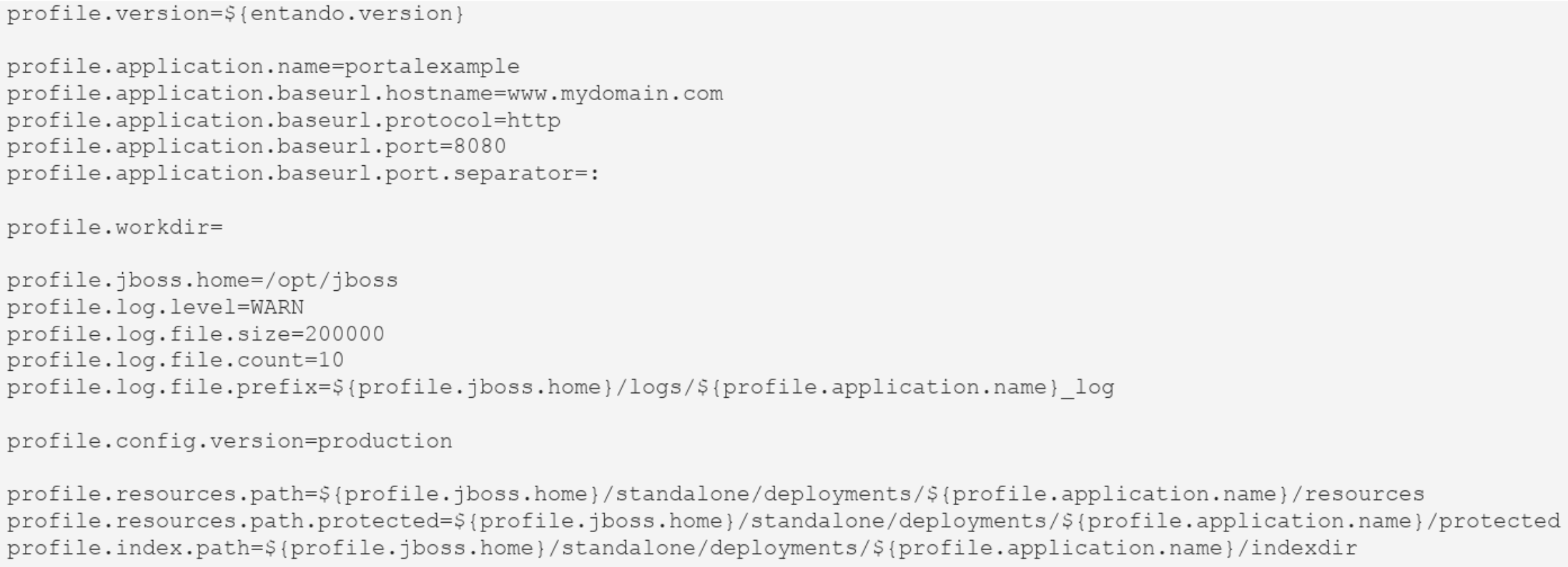

You can configure a newly created Entando application to connect to a database as its backing store. Derby is the default configuration in archetype-created applications, but you can change the default to a different value. Open the Filter Properties file in src/main/filters and enter the appropriate environment and database configuration.

Create two databases in your dbms.

To connect the MApp Engine to a database server:

1. In your database server, create a user for the application.

2. Create two databases. Give the user from Step 1 permission to create, read, and write. For the quickstart, you should name the databases as follows:

       <your-project-name>Port
       <your-project-name>Serv

   Note: These are the default names included in the default properties files. You can adjust the names and then change the connection information in the project’s configuration filters in src/main/filters.

3. You also need to uncomment the Maven dependency for the database that you are using in the pom.xml file. For example, for MySQL you would uncomment:

       <dependency>
         <groupId>mysql</groupId>
         <artifactId>mysql-connector-java</artifactId>
         <version>5.1.18</version>
       </dependency>

4. Update the appropriate Filter Properties file in src/main/filters to use the configuration for the database properties. For example, on macOS, you would update filter-development-unix.properties.

5. Set the user, database, and password for the values created in Steps 1 and 2.

6. Launch the application with:

       mvn jetty:run
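The default database names from the database-creation step above follow directly from the project name. A minimal sketch of that convention, using a hypothetical helper name:

```shell
# Prints the two default database names for a project, following the
# <your-project-name>Port / <your-project-name>Serv convention above.
entando_db_names() {
  printf '%sPort\n%sServ\n' "$1" "$1"
}
```

The two names can then be passed to whichever database client you use to create the databases. The exact creation command depends on the database server and is not covered by this sketch.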

|

When launching with the |


USING DOCKER

Full Stack Example Images

The project below includes scripts and example images that will boot up a full stack of Entando apps in Docker. https://github.com/entando/entando-ops/tree/master/Docker/Production/entando-full-stack

These projects are useful for quickly booting up an Entando environment in Docker. They can also be forked or cloned for extension and updates as needed. Scripts and image names can be seen in the repository.

Base Image

This base image provides a base Dockerfile that can be extended to create and manage an Entando application. The base image downloads all of the Maven dependencies required for an application to run, so the initial run can take some time; after that, edits to a child Dockerfile will run fast. The base example in the Readme uses a Jetty/Derby combination for execution.

https://github.com/entando/entando-ops/tree/master/Docker/Production/builder-images/docker-builder-5

Using S2I to Build Docker Images

To use the Docker S2I images, you must have already set up a database. See the Setting up a Database section for details.

By default, the app deployed in Docker will connect to a Postgres database to persist resources created using the App Builder and by the MApp Engine. In the app created from the archetype, update the properties in: <your application>/.s2i/environment to point to the user and databases created in the Setting up a Database section.

After configuring the database:

1. Pull in the Docker image (a demo image using Jetty) using the following command:

       docker pull entando/builder-s2i

2. Build and deploy your app as a Docker image using S2I:

       s2i build <path or URL of your project> entando/builder-s2i <your image name>

   Where:

   - <path or URL of your project> is the path to your project or a URL to access the code. The build for this project will be invoked and the resulting war file deployed to the app server in the image
   - entando/builder-s2i is the name of the base S2I Docker image provided by Entando
   - <your image name> is the name for this Docker image

3. Run the image:

       docker run -d -p 8080:8080 <your image name>

The app may take some time to start because it downloads Maven dependencies at startup. Your app will be available on http://localhost:8080/<your_project_name> after startup is complete.

By default the image exposes port 8080; however, you can change the local port mapped to that value by editing the first instance of 8080 in the docker run command above.
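The three commands from this section, including the port remapping just described, can be collected into one helper. The sketch below prints the commands rather than running them. The function name and argument order are hypothetical; the command lines themselves are the ones shown above.

```shell
# Prints (does not run) the three commands from the steps above.
# Arguments: <path or URL of project> <image name> [local port, default 8080]
# Only the first port in "-p LOCAL:8080" changes; the container side stays 8080.
entando_s2i_cmds() {
  src=$1
  image=$2
  port=${3:-8080}
  echo "docker pull entando/builder-s2i"
  echo "s2i build $src entando/builder-s2i $image"
  echo "docker run -d -p ${port}:8080 $image"
}
```

For instance, entando_s2i_cmds ./my-app my-image 9090 (placeholder values) ends with a docker run line that maps local port 9090 to the container's port 8080, so the app would be reached on port 9090 instead.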

1.3. APP BUILDING WITH ENTANDO

1.3.1. INTRODUCING THE ENTANDO APP BUILDER

The App Builder is Entando’s application to help you integrate, configure and customize any kind of modern web application, regardless of your programming proficiency. The App Builder provides an Admin Console, a visual environment, that collects elements for configuring your web application and managing your activities.

The App Builder is a ReactJS-based application that uses REST APIs to communicate with the Entando Core.

This document describes the elements and functionalities of the Admin Console.

ENTANDO ADMIN CONSOLE VERSION 5.0

With Entando version 5.0 the Admin Console has been redesigned and renewed with the adoption of the PatternFly framework and guidelines. PatternFly is built on top of Bootstrap 3 and provides an enterprise-grade UX look and feel based on research and UX best practices.

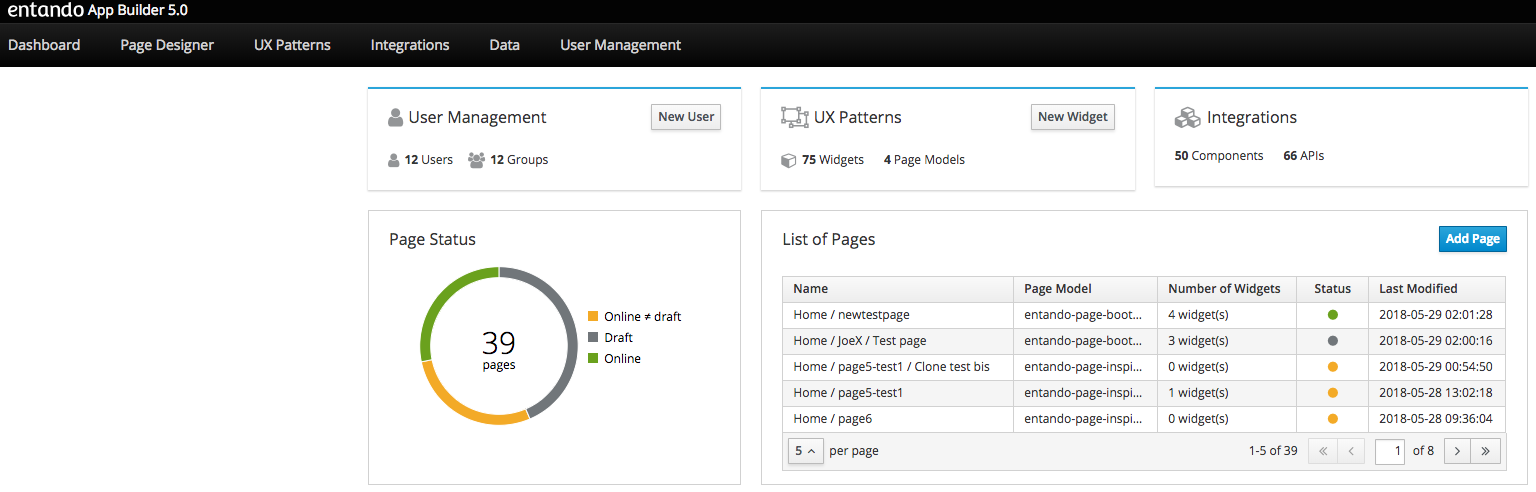

1.3.2. APP BUILDER OVERVIEW

The Entando main view is the entry point for every administration activity. At the top of the screen, a horizontal menu gives access to all of Entando’s functionalities, while at the bottom of the screen the Dashboard provides an overview of Entando status and quick access to some functionalities.

1.3.3. DASHBOARD

The dashboard is divided into distinct areas.

-

Page Status (gives an overview of the status of the pages: Online, Online ≠ Draft, and Draft)

-

List of Pages (gives an overview of the list of all Pages: Name, configured Page Model, Number of configured Widgets, Status, Last Modified)

-

Add Page (possibility to add a new Page)

-

User Management (quick access to Users list and Group list)

-

Add User (possibility to add a new User)

-

UX Patterns (gives an overview of the number of configured Widgets and Page Models)

-

Add Widget (possibility to add a new Widget)

-

Integrations (gives an overview of the number of integrated components and APIs)

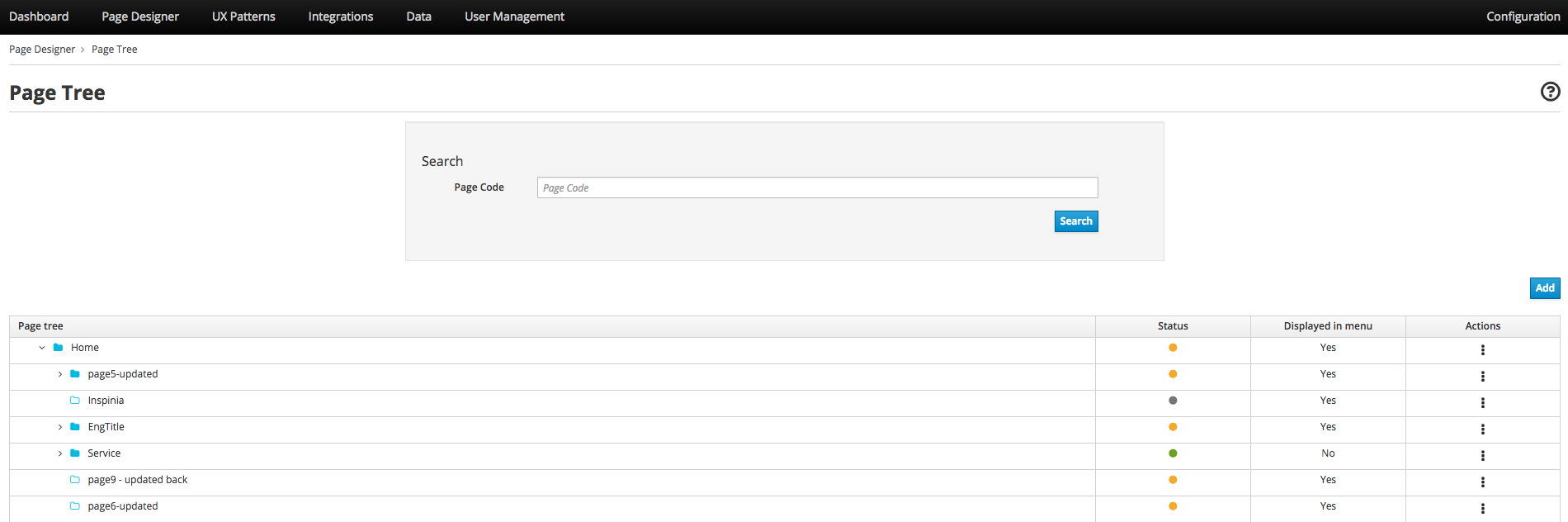

1.3.4. CREATING AND MANAGING PAGES WITH PAGE DESIGNER

The Page Designer section deals with all page management-related activities.

-

Page Tree

-

Page Configuration

-

Page Settings

USING THE PAGE TREE

The Page Tree subsection shows the hierarchical structure of the pages and their statuses, and lets you perform all page management actions.

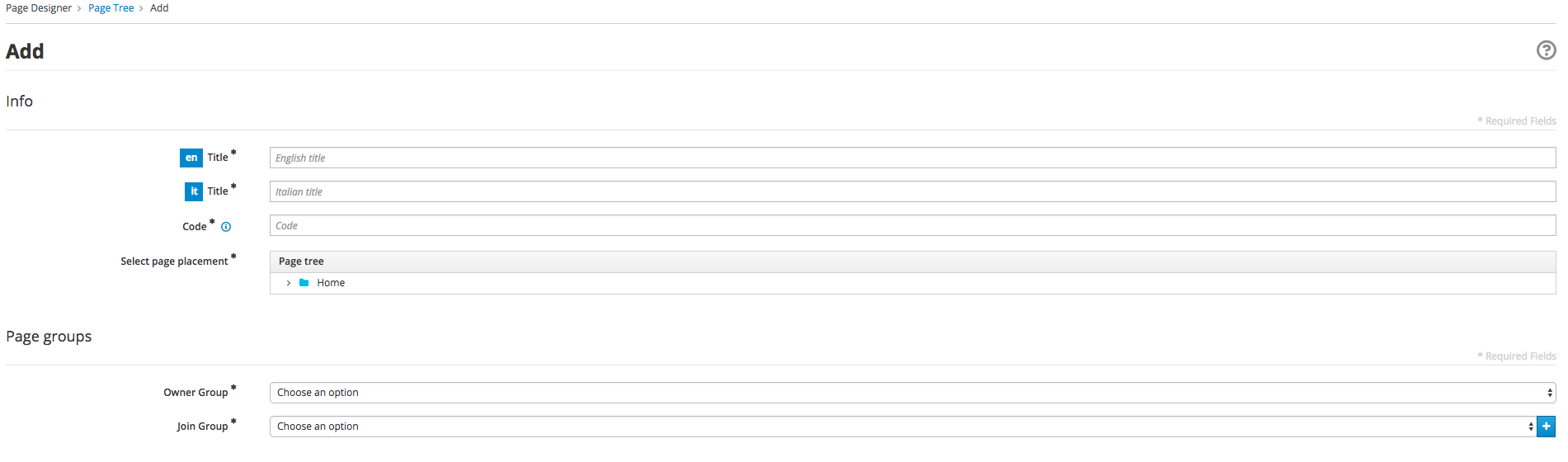

CREATING A NEW PAGE

-

From the tabs along the top, choose Page Designer > Page Tree > Add.

-

Enter a name in both the English and Italian fields.

-

(Optional) Enter a code. By default, Entando generates a code similar to the page title, but you can manually enter a different code. The field accepts alphanumeric characters and underscores, with a character limit of 30.

Once you save the page, you will no longer be able to edit the page’s Code field.

-

Select an Owner Group and a Join Group from the drop-down menus in the Page groups section. Owner Group members can administer the page. Join Group members can view the page.

-

Select from the Page Tree section the page or folder that will be the parent of the new page.

-

Select a Page Model from the Page Model drop-down menu. The Page Model determines the layout of your new page. See the Using Page Models section below.

-

Set additional options from the Settings section as needed.

  - Displayed in menu: toggles the page’s presence in the menu on or off.
  - SEO: toggles Search Engine Optimization (SEO) for the page on or off.
  - Charset: specifies the character encoding to use, either utf8 or iso-8859-1.
  - Mime Type: identifies the type of content on the page.

-

After you have made your selections, click Save and Configure to open the Configuration section. Learn more in the Configuring a Page section below.

-

Open the Page Tree and find the page to edit.

-

Click the Actions (three vertical dots) button in line with the page and select "Edit."
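The Code field rule from the page-creation steps (alphanumeric characters and underscores, with a limit of 30 characters) can be checked before a value is submitted. The sketch below is a hypothetical helper, not Entando's own validation. Accepting both upper and lower case letters and rejecting an empty value are assumptions.

```shell
# Checks a candidate page code against the rule stated above: letters,
# digits, and underscores only, at most 30 characters. Accepting both
# letter cases and rejecting an empty value are assumptions of this sketch.
is_valid_page_code() {
  printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_]{1,30}$'
}
```

A call such as is_valid_page_code my_page_01 succeeds, while a value containing a hyphen or exceeding 30 characters returns a non-zero status.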

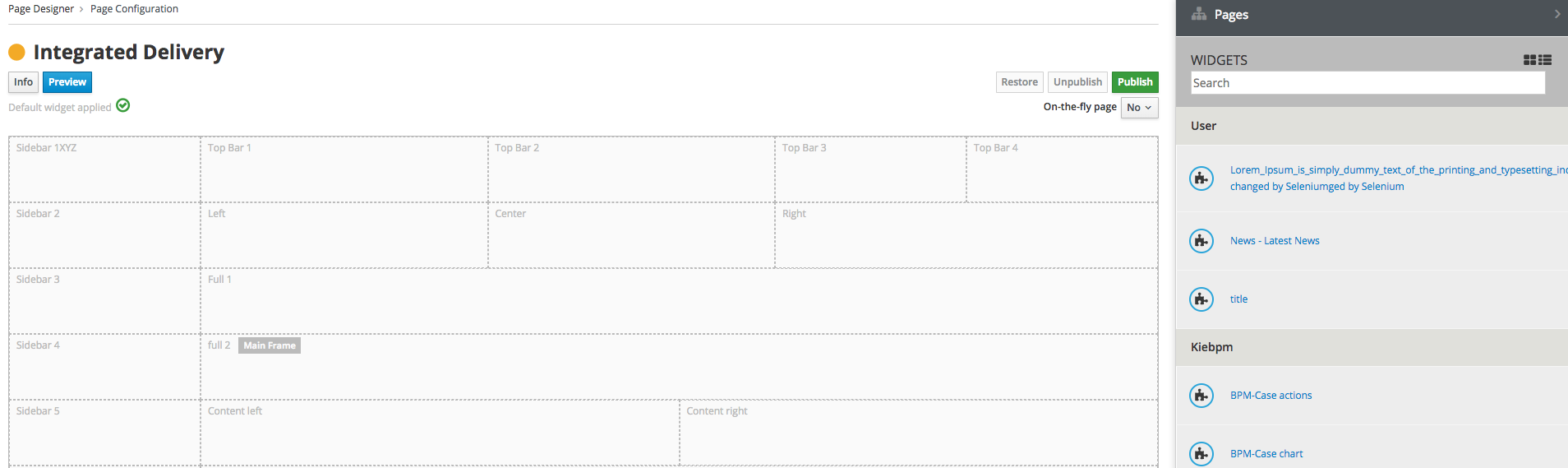

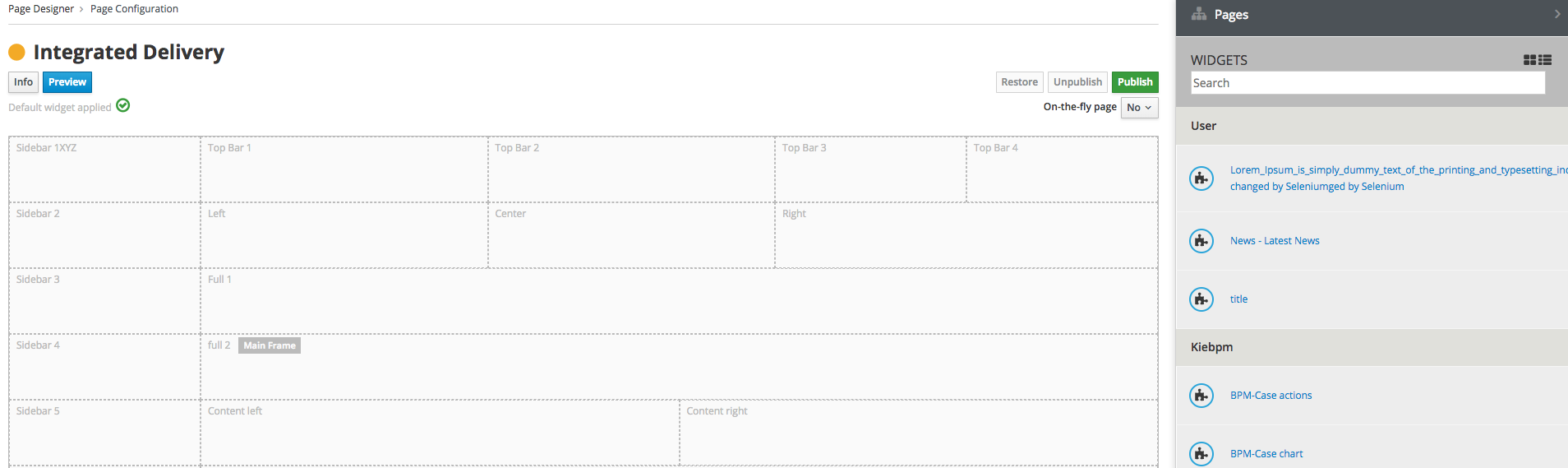

CONFIGURING A PAGE

The Page Configuration section is where you assign widgets to the frames defined by the Page Model you applied to your page. The available widgets are in the right pane. Apply widgets by dragging and dropping them to a frame of your choice.

This is also where you publish or unpublish the page or preview the layout prior to publishing.

Click the Info button for a summary of options you assigned when creating the page, e.g., Title and Owner Group.

1.3.5. UX PATTERNS: MANAGING WIDGETS, PAGE MODELS, AND FRAGMENTS

The UX Patterns section provides settings options for your general UX Patterns.

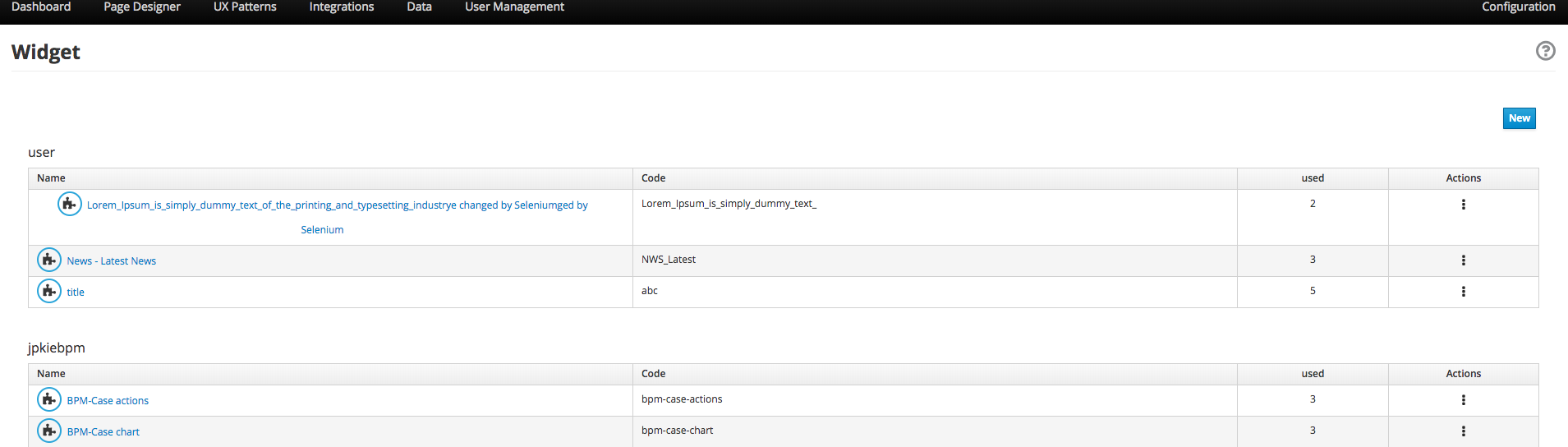

USING WIDGETS

A Widget is an independent software component configured and executed within your application’s page. Widgets provide UX/UI blocks that deliver a specific function.

-

User Widgets: user-generated widgets created via the Admin Console

-

Custom Widgets: widgets designed to meet specific application needs, such as Red Hat PAM widgets

-

Stock Widgets: default widgets provided by the App Builder to perform common tasks

-

Specific Application Widgets: widgets that apply only to a specific tool in Entando, e.g., installing the CMS module provides Content Management related widgets

-

Plugins Widgets: widgets provided by the installed plugins

Each widget’s Actions menu differs depending on the widget type; some widgets have no available actions at all.

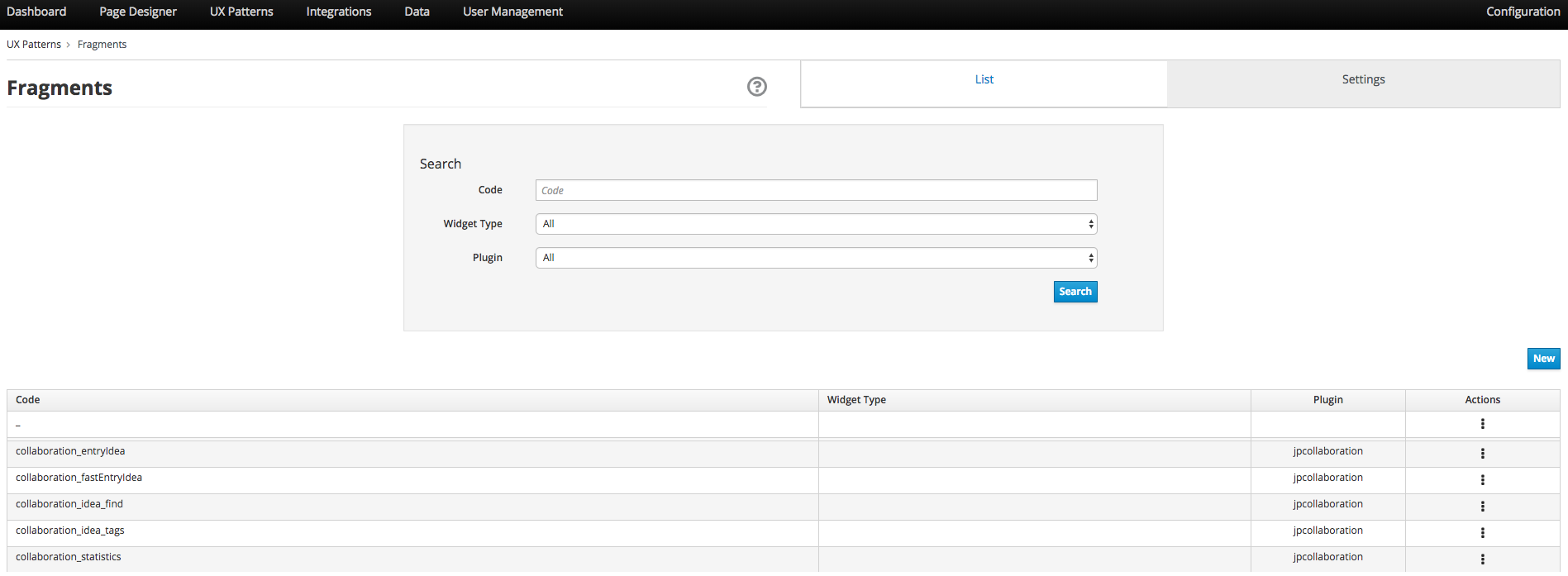

USING FRAGMENTS

Fragments are single portions of the front-end interface used in widgets (including plugins) and Page Models. They are HTML blocks that include Freemarker instructions, using specific tags that you replace with appropriate values each time you use the fragment. Entando then renders the widgets using the fragments. The FRAGMENTS section lets you manage existing fragments or add new ones.

ADDING A NEW FRAGMENT

-

From the tabs along the top, choose the UX Patterns tab > Fragments > New.

-

Enter a Code value. This value represents the fragment’s unique identifier and appears in the Name column of the Fragments table.

Once you save the fragment, you will no longer be able to edit its Code field.

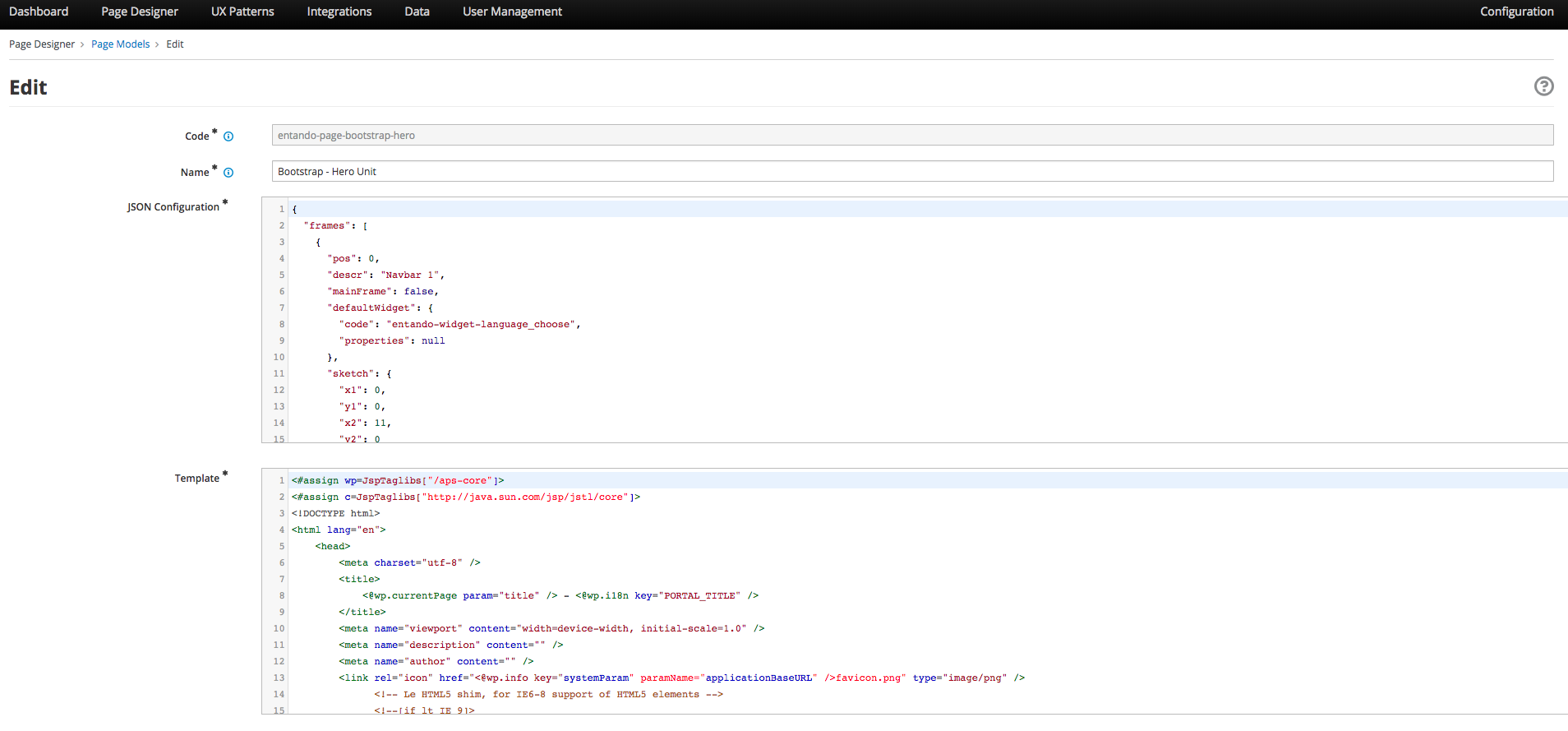

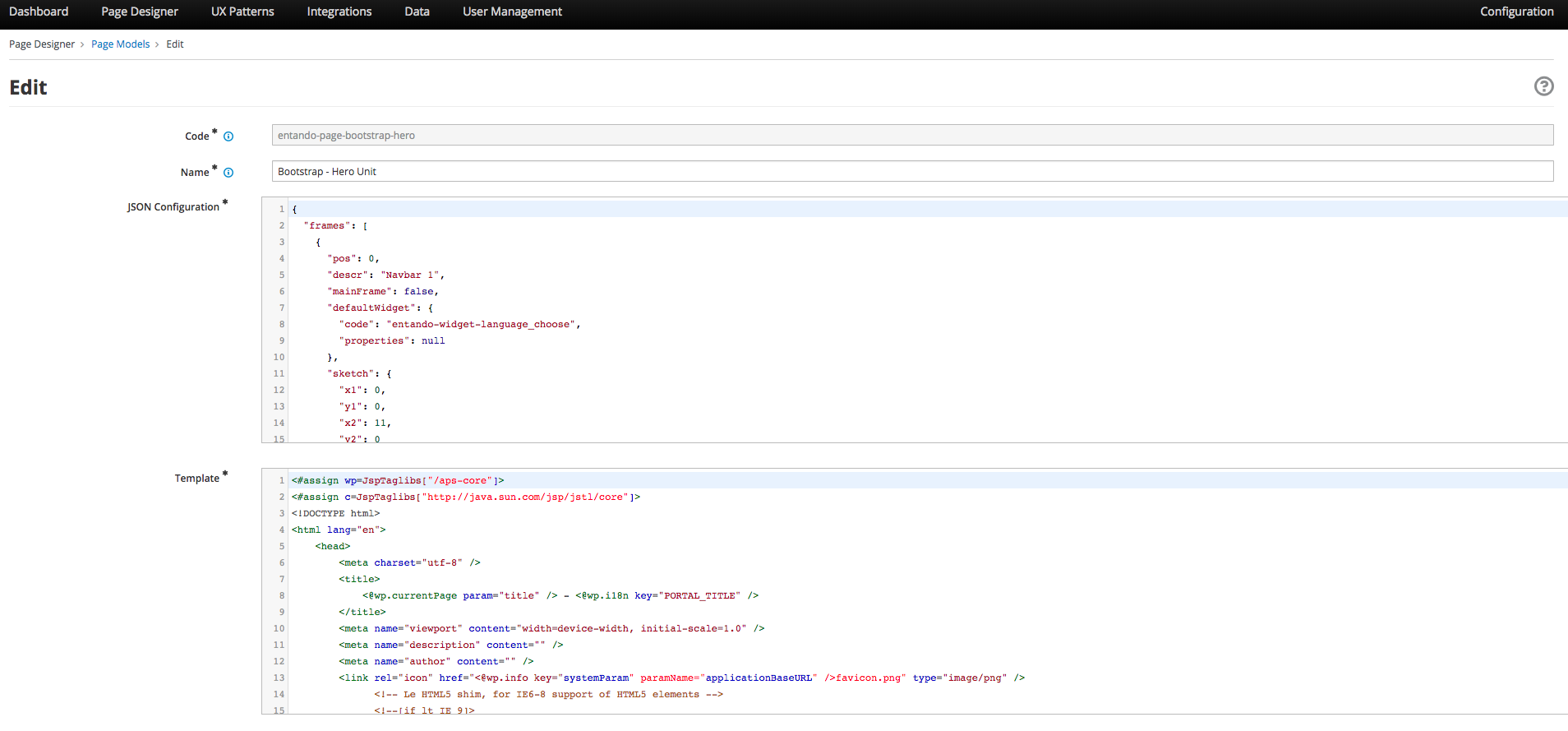

USING PAGE MODELS

The Page Model is the look and feel of an application’s page. It defines the structure of the page using frames or areas of the page that contain widgets.

-

JSON Configuration: formatted text that defines the frame structure of the page.

-

Template: the look and feel and the actual layout of the page. In other words, the template defines where to show a frame and how it will be rendered. Templates use the FreeMarker template language (for example, the tag <@wp.show frame=0 /> renders frame 0) to provide a simple and clean way to incorporate dynamic content into a web page. It supports directives, interpolations, and comments, as well as access to exposed Java objects and their methods.

CREATING A PAGE MODEL

-

From the tabs along the top, select UX Patterns > Page Models > Add.

-

Assign the page model a code and a name. Codes represent the page model’s unique identifier. The name can be more human readable. For example:

Code: entando_page_purple

Name: Purple - BPM

-

In the JSON Configuration field, enter JSON content to structure the page. For example:

{ "frames": [ { "pos": 0, "descr": "Top bar 1", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 0, "x2": 2, "y2": 0 } } ] }

The JSON content must be a single object defining "frames." In other words, the content you enter must go within the frames: [] object.

See the Getting Started with Entando App Builder document for a complete example.

-

In the Template field, enter the template markup (HTML with FreeMarker tags) that determines where each defined frame belongs and how to render it. See the Getting Started with Entando App Builder document for a complete example.

-

When finished, click Save.

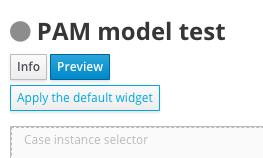
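
Before pasting a JSON Configuration into the field, it can help to check its shape offline. The following is a minimal sketch, not part of Entando: it assumes the keys shown in the example above (pos, descr, mainFrame, defaultWidget, and the optional sketch coordinates) are the ones to check, and that pos values count up from 0 in list order. The function name validate_page_model is hypothetical.

```python
import json

# Keys taken from the example above; treating them as required is an assumption.
FRAME_KEYS = ("pos", "descr", "mainFrame", "defaultWidget")
SKETCH_KEYS = ("x1", "y1", "x2", "y2")


def validate_page_model(text):
    """Return a list of problems found in a JSON Configuration string."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err.msg}"]

    # The content must be a single object whose "frames" entry is a list.
    if not isinstance(config, dict) or not isinstance(config.get("frames"), list):
        return ['expected a single object with a "frames" list']

    problems = []
    for index, frame in enumerate(config["frames"]):
        if not isinstance(frame, dict):
            problems.append(f"frame {index}: not an object")
            continue
        for key in FRAME_KEYS:
            if key not in frame:
                problems.append(f"frame {index}: missing {key}")
        # Assumption: "pos" values count up from 0 in list order.
        if "pos" in frame and frame["pos"] != index:
            problems.append(f"frame {index}: pos is {frame['pos']}, expected {index}")
        sketch = frame.get("sketch")
        if sketch is not None:
            for key in SKETCH_KEYS:
                if not isinstance(sketch, dict) or key not in sketch:
                    problems.append(f"frame {index}: sketch is missing {key}")
    return problems
```

An empty list means the text passed these checks; it does not guarantee that the App Builder will accept the configuration.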

USING THE "INSPINIA - BPM CASE MANAGEMENT WIDGETS" PAGE MODEL

The Inspinia - BPM Case Management Widgets page model is a pre-designed model that displays all Red Hat Process Automation Manager (PAM) case management widgets on a single page. The list of widgets that this page model deploys is as follows:

-

Case selector

-

Case details

-

Case progress status

-

Case roles

-

Case comments

-

Case instance diagram

-

BPM case charts

-

Case instance file

-

Select Inspinia - BPM Case Management Widgets when declaring the page model for your page.

-

Click Save and Configure when you have set all options for your page.

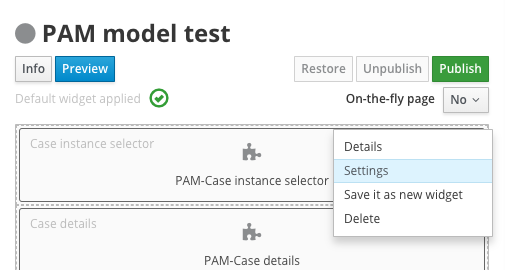

-

On the resulting screen, click Apply the default widget.

-

After the page model loads, select the Actions menu from the top frame titled "PAM - Case instance selector" and select "Settings."

-

Select the relevant Knowledge Source and Deployment Unit and click the orange button if you changed any options.

-

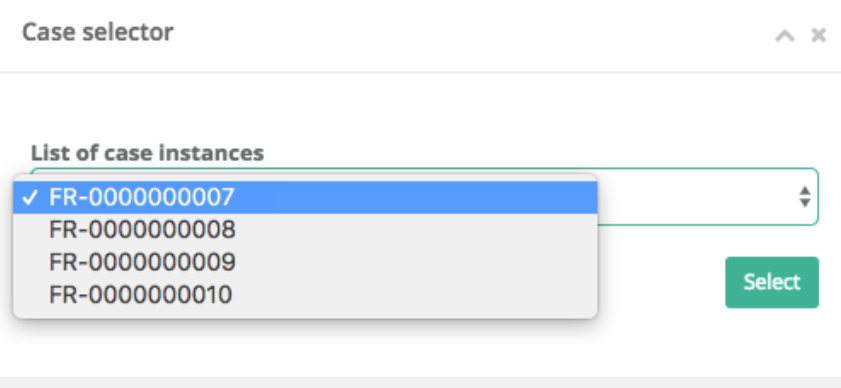

Click Save and view the page.

-

Select a case from the list of cases and click Select to populate all widgets with data from your selected case.

For more information on PAM integrations, see the Integrating with Red Hat Process Automation Manager (PAM) section.

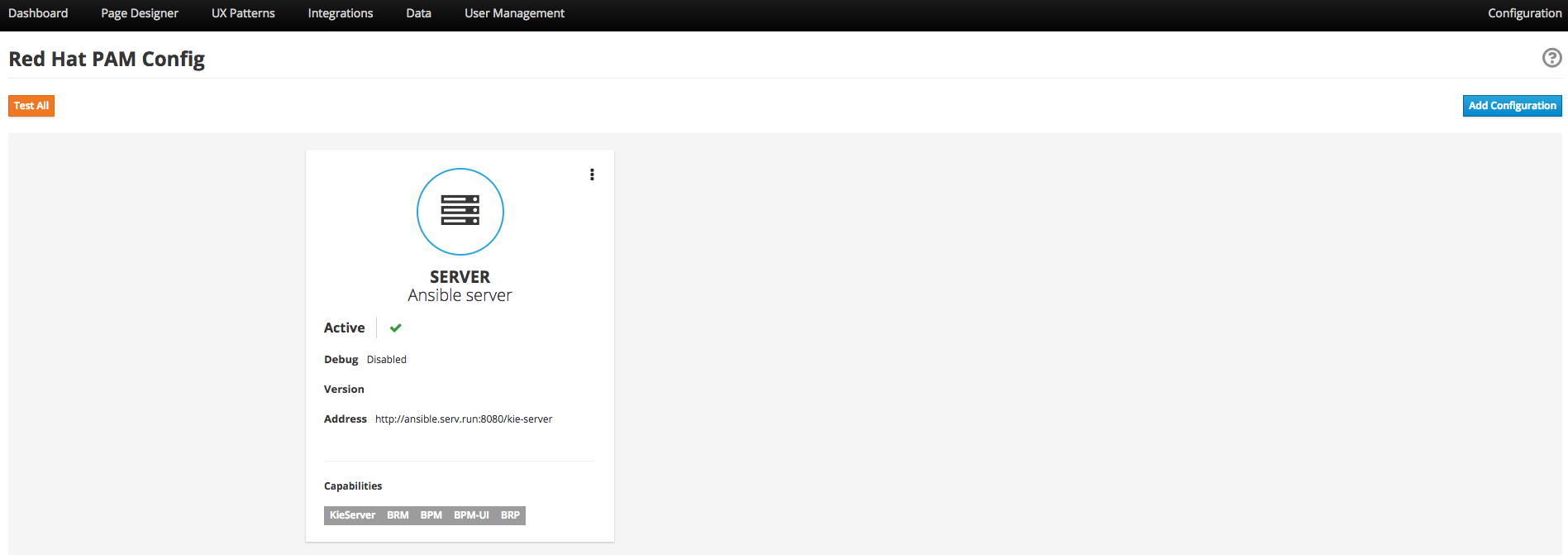

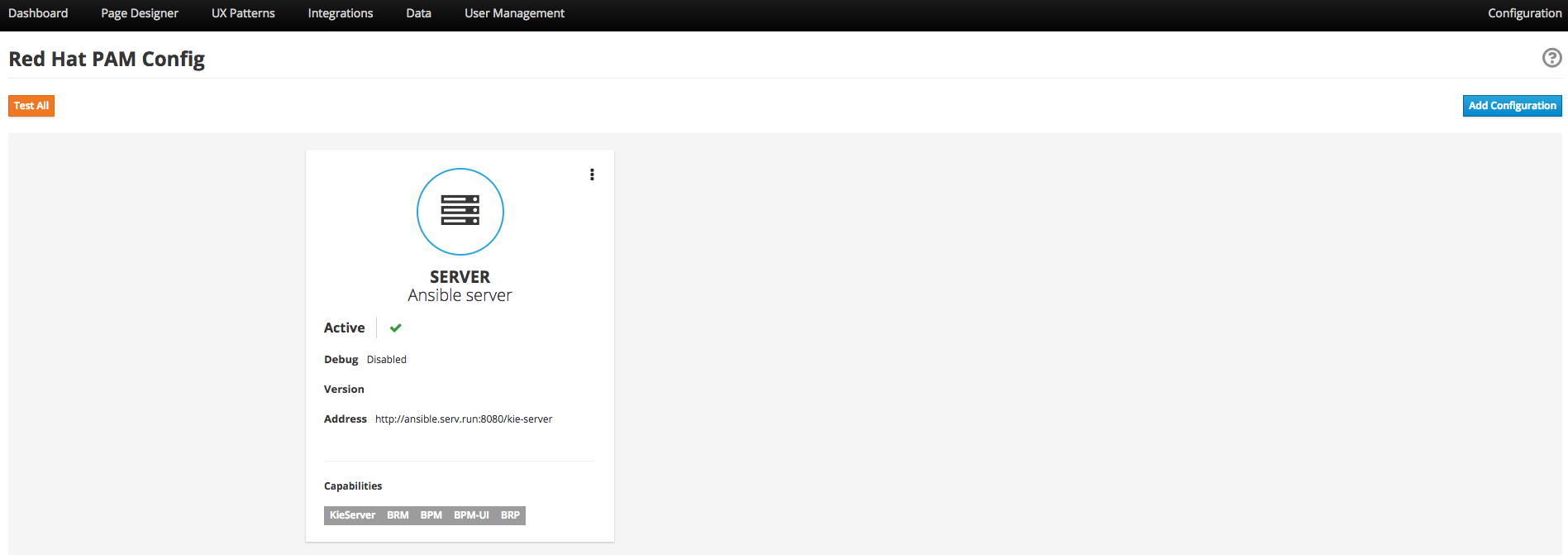

1.3.6. INTEGRATING WITH RED HAT PROCESS AUTOMATION MANAGER (PAM)

Entando integrates with Red Hat’s Process Automation Manager (formerly Red Hat JBoss BPM Suite), a middleware platform for creating cloud-native business automation applications and microservices. It enables enterprise business and IT users to document, simulate, manage, automate and monitor business processes and policies. It is designed to empower business and IT users to collaborate more effectively, so business applications can be changed more easily and quickly.

-

From the tabs along the top, choose Integrations > Red Hat BPM Config > Add Integration.

-

In the Connection section, add all server information. For example:

-

General Settings section:

-

Active: On

-

(Optional) Debug: On

-

-

Connection section:

-

Name: Ansible Server

-

Host name: ansible.serv.run

-

Schema: http

-

Port: 8080

-

Webapp name: kie-server

-

Username: username for the process server administrator

-

Password: password for the process server administrator

-

Conn. Timeout (in millis): 500

-

-

-

Click Test Connection to verify that the configuration is valid, then click Save.

-

Open the Page Tree by choosing the Page Designer tab > Page Tree.

-

Find your new page in the Page Tree and click its More Options button > Configure.

-

In the right pane, search for the “BPM-form by Data Type” widget and drag it to a frame established by your page model.

-

Click the Preview button to verify the correct appearance.

-

Click Publish once your page is complete.
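
The Conn. Timeout value in the Connection section is given in milliseconds. As a rough illustration of what a timeout of 500 means, the sketch below opens a plain TCP connection with the same limit. It is only an analogy for checking that a host and port are reachable; it is not how the Test Connection button works, and the function name can_connect is hypothetical.

```python
import socket


def can_connect(host, port, timeout_millis=500):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        # create_connection expects seconds, so convert from milliseconds.
        with socket.create_connection((host, port), timeout=timeout_millis / 1000):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False
```

For the sample settings above, the call would be can_connect("ansible.serv.run", 8080). A successful TCP connection says nothing about credentials or the webapp name, which only the App Builder's own test can verify.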

1.3.7. MANAGING USERS

The Entando App Builder assigns access rights using combinations of roles and groups. You can manage all activities and privileges for roles and groups through the User Management tab.

-

Users: perform operations and access information. Users are characterized by a Profile Type that identifies the individual, such as name and email address. The actions that a user can take in an Entando program derive from combinations of Roles and Groups.

-

Roles: assigned sets of permissions, e.g., content editing rights, or user profile editing rights. Roles are mutually independent, meaning one user can be associated with multiple roles.

-

Groups: assigned sets of users, with each user given a role within the group. One user can belong to multiple groups. For example, Lynne is a member of the Content Editing group and has the role of Administrator, giving her the ability to perform any task on pages that the Content Editing group is assigned to. She’s also a member of the Newsletter group with the role of Editor, meaning she can edit content, but does not have full access to the pages the Newsletter group is assigned to.

-

User Restrictions: set access restrictions on users, e.g., the number of months an account remains available after the last access, or the number of months a user password remains valid.

-

Profile Types: manage the different user profile types and create new profile types.

For first-time projects, consider creating profiles from largest to smallest.
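
The relationship between users, roles, and groups described above can be sketched as a small data model. This is an illustrative sketch only, using the Lynne example from the Groups description; the permission names (edit_content, manage_pages) and the is_allowed helper are hypothetical and do not correspond to Entando's internal classes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    name: str
    permissions: frozenset


@dataclass
class User:
    username: str
    # Group name -> Role held by the user inside that group.
    authorizations: dict = field(default_factory=dict)


def is_allowed(user, permission, page_group):
    """True if the user's role in the page's group grants the permission."""
    role = user.authorizations.get(page_group)
    return role is not None and permission in role.permissions


# Hypothetical permission names, for illustration only.
administrator = Role("Administrator", frozenset({"edit_content", "manage_pages"}))
editor = Role("Editor", frozenset({"edit_content"}))

lynne = User("lynne", {"Content Editing": administrator, "Newsletter": editor})

print(is_allowed(lynne, "manage_pages", "Content Editing"))  # True
print(is_allowed(lynne, "manage_pages", "Newsletter"))       # False
```

The point of the model is that a permission is always evaluated for a group and role together, never for the user alone.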

WORKING WITH USERS

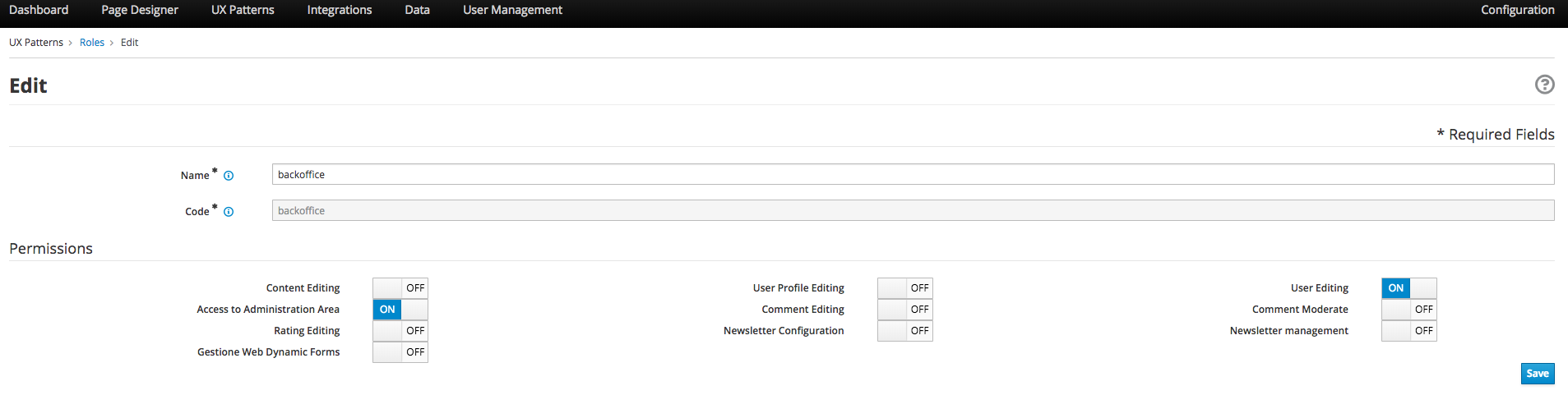

CREATING A ROLE

-

From the tabs along the top, select User Management > Roles > Add.

-

Enter a name.

-

(Optional) Enter a code. The code is the Role’s unique identifier. By default, Entando generates a code similar to the role name, but you can manually enter a different code. The field accepts alphanumeric characters and underscores, with a character limit of 30.

Once you save the role, you will no longer be able to edit the role’s Code field.

-

In the Permissions section, toggle to "On" the access rights all users assigned to this role will possess. For example, public users may have no options toggled while power users may have the "All Permissions" option toggled to On.

-

Click Save. To edit a Role after saving, return to the Roles screen. In the Roles table, select the Actions menu (three vertical dots) for the role to change and select "Edit."

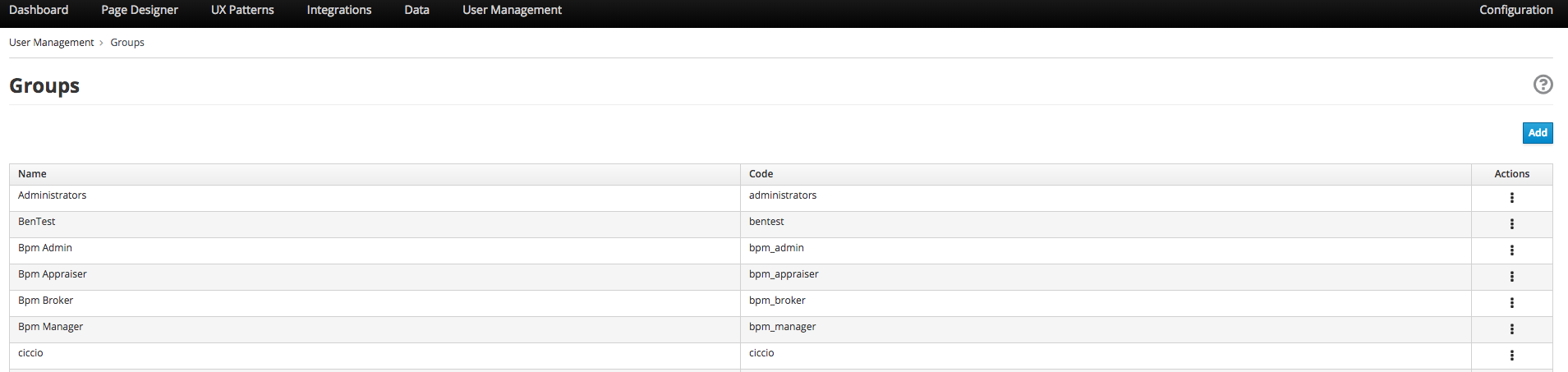
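
The Code rule stated above (alphanumeric characters and underscores, at most 30 characters) is easy to check before you type a value into the form. The sketch below assumes that rule is complete; suggest_code is only a guess at what an auto-generated code might look like, not Entando's actual algorithm, and both function names are hypothetical.

```python
import re

# Alphanumeric characters and underscores, 1 to 30 characters long.
CODE_PATTERN = re.compile(r"[A-Za-z0-9_]{1,30}")


def is_valid_code(code):
    """Check a candidate code against the documented character rule."""
    return CODE_PATTERN.fullmatch(code) is not None


def suggest_code(name):
    """Derive a candidate code from a name (hypothetical, for illustration)."""
    code = re.sub(r"[^A-Za-z0-9]+", "_", name.strip().lower()).strip("_")
    return code[:30]


print(suggest_code("Content Editor"))  # content_editor
print(is_valid_code("power-user"))     # False, hyphens are not allowed
```

Because the Code field cannot be edited after saving, a quick check like this can avoid having to delete and recreate a role or group.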

CREATING A GROUP

-

From the tabs along the top, select User Management > Groups > Add.

-

Enter a name.

-

(Optional) Enter a code. The code is the Group’s unique identifier. By default, Entando generates a code similar to the group name, but you can manually enter a different code. The field accepts alphanumeric characters and underscores, with a character limit of 30.

Once you save the group, you will no longer be able to edit the group’s Code field.

To edit a Group after saving, return to the Groups screen. In the Groups table, select the Actions menu (three vertical dots) for the group to change and select "Edit."

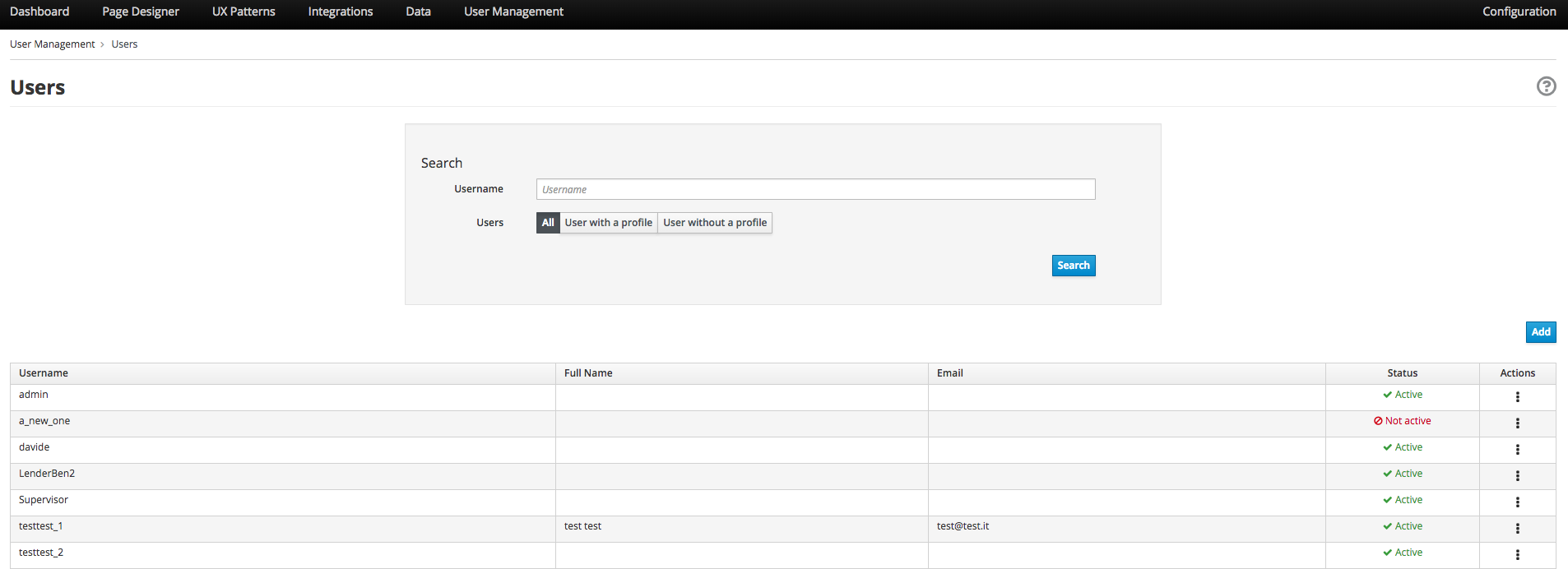

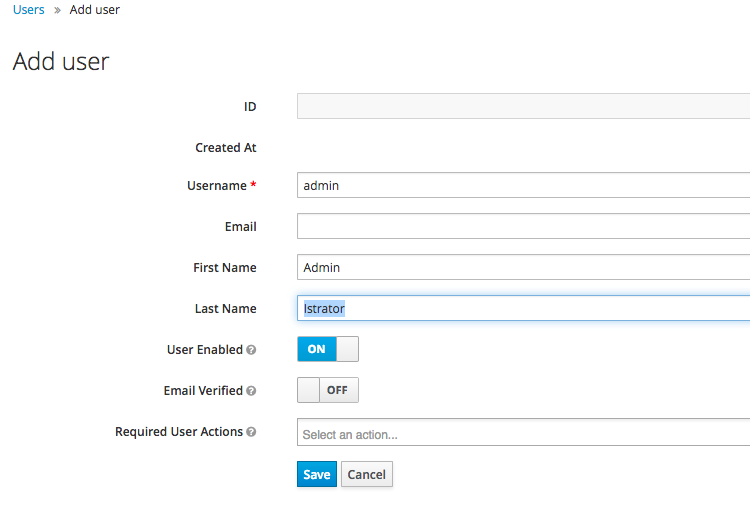

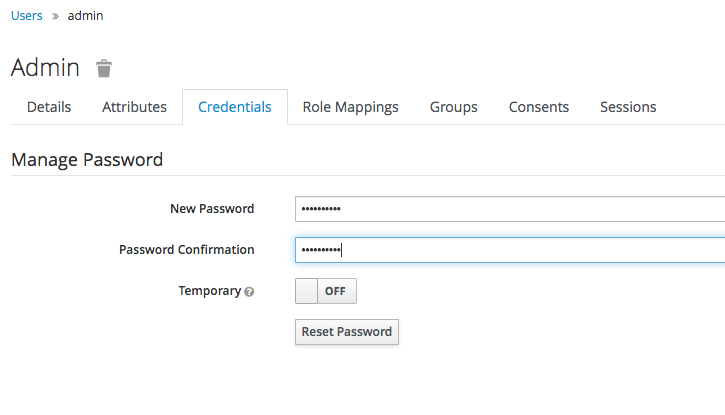

CREATING A USER

-

From the tabs along the top, choose User Management > Users > Add.

-

In the Username field, enter a username.

The username must be between 8 and 20 characters. You can use numbers, underscores, special characters, and upper or lowercase letters.

-

Enter a password.

-

From the Profile Type drop-down menu, select “Default User Profile.”

-

Toggle the Status to “On” to ensure that individuals can sign in with this user’s credentials.

-

Click Save to create the user and return to the Users screen.

SETTING A USER’S AUTHORIZATIONS

-

From the table on the Users screen, find your new user profile and click More Options > Manage authorizations for: your profile name. Set the following options:

-

User Group: select a group to assign this user to. You can assign a user to as many groups as you want.

-

User Role: select the role this user should have in the group. You can only assign a user to one role per user group.

The User Group/Role pairing will appear in the Authorizations table at the top of the screen.

-

-

Click Add and then click Save.

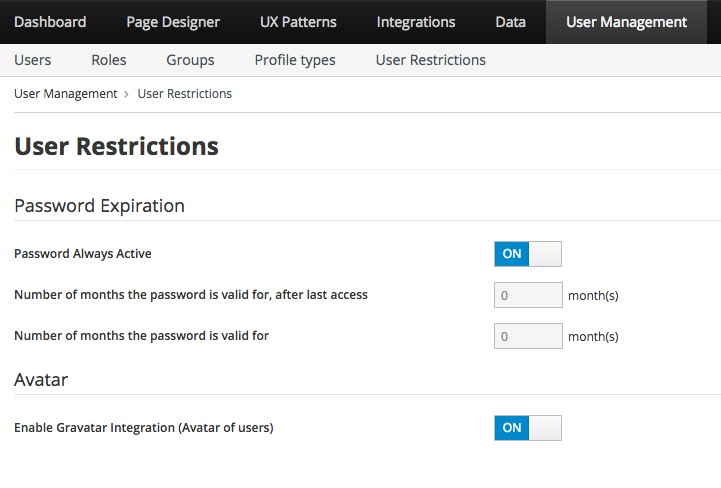
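
The rule that a user can hold only one role per group, while belonging to any number of groups, can be sketched as a mapping keyed by group. The Authorizations class below is a hypothetical in-memory stand-in for the Authorizations table, not an Entando API.

```python
class Authorizations:
    """In-memory stand-in for the Authorizations table of a single user."""

    def __init__(self):
        self._role_by_group = {}

    def add(self, group, role):
        # One role per group: a second role for the same group is rejected.
        if group in self._role_by_group:
            current = self._role_by_group[group]
            raise ValueError(f"{group!r} already has role {current!r}")
        self._role_by_group[group] = role

    def rows(self):
        """Return the group/role pairings in the order they were added."""
        return list(self._role_by_group.items())


table = Authorizations()
table.add("Content Editing", "Administrator")
table.add("Newsletter", "Editor")
print(table.rows())
```

Calling table.add("Newsletter", "Administrator") at this point raises ValueError, mirroring the one-role-per-group restriction.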

RESTRICTING ACCESS

Entando lets you set restrictions on users (see Figure below), specifically a shelf life for passwords and a maximum time between logins before the password expires.

-

From the tabs along the top, choose User Management > User Restrictions.

-

Toggle the Password Always Active option.

-

On - the password never expires and users have unlimited time between logins

-

Off - activates the password limitation fields, allowing you to set expirations

-

-

Toggle the Enable Gravatar integration (Avatar of users) option.

-

On - enables management of the user’s avatar

-

Off - disables Gravatar integration

-
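
The effect of the Password Always Active toggle and a month-based limit can be sketched with simple date arithmetic. This is an assumption-laden illustration: the parameter names are hypothetical, and the exact boundary behavior (whether a password expires on or after the final day) is a guess, not taken from Entando.

```python
import calendar
from datetime import date


def add_months(start, months):
    """Return the date `months` later, clamping to the end of short months."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def is_expired(reference_date, today, limit_months, always_active=False):
    """True if `today` is past reference_date + limit_months.

    reference_date is the last password change (for password validity)
    or the last login (for the maximum time between logins).
    """
    if always_active:
        # "Password Always Active" set to On: nothing ever expires.
        return False
    return today > add_months(reference_date, limit_months)


print(is_expired(date(2019, 1, 15), date(2019, 4, 16), 3))        # True
print(is_expired(date(2019, 1, 15), date(2019, 4, 16), 3, True))  # False
```

The same helper covers both restrictions because each one compares today's date with a reference date plus a number of months.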

1.3.8. PRESENTING DATA

Data Types are collections of attributes. They are used to gather and manage data coming from external sources, like APIs or the IoT. This abstraction layer allows Entando to perform core features like searching on external data.

Data Models are used to agnostically render data coming from data types, ensuring that the same data can be represented differently, such as with forms, tables, or graphs. By separating the definition and presentation of data, the Entando App Builder gives you the ability to display the same data in a context- and user-aware way without requiring a data source or integration.

-

Create a Data Type.

-

Create one or more Data Models per Data Type.

-

Publish the data on web pages with one or more widgets. The Data section allows you to manage the Data.
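
The separation between a Data Type (the attributes) and its Data Models (the presentations) can be sketched with two templates applied to the same record. This is only a conceptual illustration using Python's string.Template; the record, attribute names, and model names are hypothetical, and Entando's own Data Models use their own template syntax.

```python
from string import Template

# One record of a hypothetical Data Type with three attributes.
reading = {"sensor": "boiler-1", "temperature": 71.5, "unit": "C"}

# Two Data Models for the same Data Type: same data, different presentation.
DATA_MODELS = {
    "summary": Template("$sensor: $temperature $unit"),
    "table_row": Template("<tr><td>$sensor</td><td>$temperature</td><td>$unit</td></tr>"),
}


def render(model_name, record):
    """Render a record with the named model."""
    return DATA_MODELS[model_name].substitute(record)


print(render("summary", reading))    # boiler-1: 71.5 C
print(render("table_row", reading))
```

Adding a third presentation means adding a template, without touching the data or its source.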

STRUCTURING WITH DATA TYPES

The Data Types section lists all available Data Types and their attributes, and is where you can create a new data structure.

-

From the tabs along the top, choose Data > Data Type > New.

-

Enter a Code. The code must be three letters.

-

Enter a Name.

-

Click Save. This opens the Attributes section where you specify OGNL validation.

1.3.9. CONFIGURING ENTANDO

The Configuration section (far right of the toolbar) gives you access to Entando’s general-purpose functions, such as configuring Categories and Labels, reloading the configuration, backing up the database, browsing files, and managing groups.

CATEGORIES

Categories are dividers and parent folders for pages in your Entando page tree. Add a new category by clicking the Add button.

LABELS AND LANGUAGES

The Languages tab displays a list of all configured languages in the system. You can add as many different languages to the system as you need by selecting a language from the Select Language drop down menu and clicking Add. You can then include the appropriate vocabulary for any term in the System Labels section.

The System Labels tab displays system labels that apply to the entire Entando application. By default, system labels are available in English and Italian. If you add additional languages in the Languages tab, you can enter the appropriate word for a label in your chosen language.

DATABASE

The Database section lets you back up your entire Entando database by clicking the Create Backup button. The backed up files become available from the Protected folder of the File Browser section once the backup process completes.

FILE BROWSER

The File Browser section lets you browse files, upload, create text files and folders, and access database backups from two distinct areas of the file-system:

-

Public: /project_name/src/main/webapp/resources

-

Protected: /project_name/src/main/webapp/protected

Backed up database files are in the Protected section by default.

1.4. GETTING STARTED WITH ENTANDO

1.4.1. SAMPLE MORTGAGE APPLICATION

PREFACE

The Entando App Builder helps you create a variety of applications and integrate them with Red Hat BPM. It uses an intuitive UI, reusable templates, and drag-and-drop functionality that allows you to participate in every stage of the application design process regardless of your level of coding knowledge.

This demonstration walks through creating an applicant page for a mortgage application, and then integrates with Red Hat PAM.

Prerequisites:

-

Entando MApp Engine and App Builder installed

CONFIGURING THE APPLICANT PAGE

The Applicant page is a public-facing webpage.

CREATING A NEW PAGE MODEL

Page models are reusable templates. They determine the page layout via frames into which you can then drag and drop content and widgets.

-

Open the Entando App Builder.

-

From the tabs along the top, select UX Patterns > Page Models > Add.

-

Assign the page model a code and a name. Codes represent the page model’s unique identifier. The name can be more human readable. For example:

Code: entando_page_purple

Name: Purple - BPM

-

In the JSON Configuration field, enter JSON content to structure the page. See the following text for an example.

{
  "frames": [
    { "pos": 0, "descr": "Top bar 1", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 0, "x2": 2, "y2": 0 } },
    { "pos": 1, "descr": "Top Bar 2", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 3, "y1": 0, "x2": 5, "y2": 0 } },
    { "pos": 2, "descr": "Top Bar 3", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 6, "y1": 0, "x2": 8, "y2": 0 } },
    { "pos": 3, "descr": "Top Bar 4", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 9, "y1": 0, "x2": 11, "y2": 0 } },
    { "pos": 4, "descr": "Central Bar 1", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 1, "x2": 11, "y2": 1 } },
    { "pos": 5, "descr": "Central Bar mortgage 2", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 2, "y1": 2, "x2": 9, "y2": 2 } },
    { "pos": 6, "descr": "Central Bar left", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 2, "y1": 3, "x2": 4, "y2": 3 } },
    { "pos": 7, "descr": "Central Bar Center", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 5, "y1": 3, "x2": 7, "y2": 3 } },
    { "pos": 8, "descr": "Central Bar right", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 8, "y1": 3, "x2": 9, "y2": 3 } },
    { "pos": 9, "descr": "Banner Advisor", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 4, "x2": 11, "y2": 4 } },
    { "pos": 10, "descr": "Full", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 5, "x2": 11, "y2": 5 } },
    { "pos": 11, "descr": "Footer Left", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 6, "x2": 5, "y2": 6 } },
    { "pos": 12, "descr": "Footer right", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 6, "y1": 6, "x2": 11, "y2": 6 } },
    { "pos": 13, "descr": "Footer 2 Left", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 0, "y1": 7, "x2": 5, "y2": 7 } },
    { "pos": 14, "descr": "Footer 2 right", "mainFrame": false, "defaultWidget": null, "sketch": { "x1": 6, "y1": 7, "x2": 11, "y2": 7 } }
  ]
}

-

In the Template field, enter the template markup (HTML with FreeMarker tags) that determines where each defined frame belongs and how to render it. See the example content below:

<#assign wp=JspTaglibs["/aps-core"]>
<#assign c=JspTaglibs["http://java.sun.com/jsp/jstl/core"]>
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8" />
  <title>
    <@wp.currentPage param="title" /> - <@wp.i18n key="PORTAL_TITLE" />
  </title>
  <meta name="viewport" content="width=device-width, initial-scale=1.0" />
  <meta name="description" content="" />
  <meta name="author" content="" />
  <link rel="icon" href="<@wp.info key="systemParam" paramName="applicationBaseURL" />favicon.png" type="image/png" />
  <!-- Le HTML5 shim, for IE6-8 support of HTML5 elements -->
  <!--[if lt IE 9]>
  <script src="<@wp.resourceURL />static/js/entando-misc-html5-essentials/html5shiv.js"></script>
  <![endif]-->
  <@c.import url="/WEB-INF/aps/jsp/models/inc/content_inline_editing.jsp" />
  <@c.import url="/WEB-INF/aps/jsp/models/inc/header-inclusions_light.jsp" />
  <style>
    .editableform .control-group { margin-bottom: 0; white-space: nowrap; line-height: 28px; }
  </style>
</head>
<body class="purple" data-spy="scroll" data-target="#navbar-menu">
  <!-- Navbar -->
  <div class="navbar navbar-custom navbar-fixed-top sticky" role="navigation" id="sticky-nav">
    <div class="container">
      <!-- Navbar-header -->
      <div class="navbar-header">
        <!-- Responsive menu button -->
        <button type="button" class="navbar-toggle" data-toggle="collapse" data-target=".navbar-collapse">
          <span class="sr-only">Toggle navigation</span>
          <span class="icon-bar"></span>
          <span class="icon-bar"></span>
          <span class="icon-bar"></span>
        </button>
        <!-- LOGO -->
        <a class="navbar-brand logo" href="#">
          <img alt="acme-logo" class="logo-img" src="<@wp.imgURL />Logo_Acme_Bank.png">
        </a>
      </div>
      <!-- end navbar-header -->
      <!-- menu -->
      <div class="navbar-collapse collapse" id="navbar-menu">
        <!--Navbar left-->
        <ul class="nav navbar-nav nav-custom-left">
          <!--frame 0 1-->
          <@wp.show frame=0 />
          <@wp.show frame=1 />
        </ul>
        <!-- Navbar right -->
        <ul class="nav navbar-nav navbar-right">
          <!--frame 2 3-->
          <@wp.show frame=2 />
          <@wp.show frame=3 />
        </ul>
      </div>
      <!--/Menu -->
    </div>
    <!-- end container -->
  </div>
  <!-- End navbar-custom -->
  <!-- HOME -->
  <section>
    <!--frame 4-->
    <@wp.show frame=4 />
    <!--frame 4-->
  </section>
  <!-- END HOME -->
  <!-- Features Alt -->
  <section class="section" id="mortgage">
    <div class="container">
      <div class="row">
        <div class="col-sm-12">
          <!--frame 5-->
          <@wp.show frame=5 />
          <!--frame 5-->
        </div>
      </div>
    </div>
  </section>
  <section class="section">
    <div class="container">
      <div class="col-sm-4">
        <!--frame 6-->
        <@wp.show frame=6 />
        <!--frame 6-->
      </div>
      <div class="col-sm-4">
        <!--frame 7 frame bpm -->
        <@wp.show frame=7 />
        <!--frame 7-->
      </div>
      <div class="col-sm-4">
        <!--frame 8 frame bpm -->
        <@wp.show frame=8 />
        <!--frame 8-->
      </div>
    </div>
  </section>
  <section>
    <!--frame 9-->
    <@wp.show frame=9 />
    <!--frame 9-->
  </section>
  <section class="">
    <!--frame 10-->
    <@wp.show frame=10 />
    <!--frame 10-->
  </section>
  <section class="">
    <div class="container">
      <div class="col-md-12">
        <div class="text-center">
          <!--frame 11-->
          <@wp.show frame=11 />
        </div>
        <!--frame 11-->
      </div>
    </div>
  </section>
  <!-- FOOTER -->
  <footer class=" ">
    <div class="container text-center">
      <div class="row">
        <div class="col-md-12">
          <!--frame 12-->
          <@wp.show frame=12 />
          <!--frame 12-->
        </div>
      </div>
      <!-- end row -->
      <div class="row">
        <div class="col-sm-6">
          <!--frame 13-->
          <@wp.show frame=13 />
          <!--frame 13-->
        </div>
        <div class="col-sm-6">
          <!--frame 14-->
          <@wp.show frame=14 />
          <!--frame 14-->
        </div>
      </div>
    </div>
  </footer>
  <!-- END FOOTER -->
  <script src="<@wp.resourceURL />static/js/jquery.ajaxchimp.js"></script>
  <script src="<@wp.resourceURL />static/js/jquery.sticky.js"></script>
  <script src="<@wp.resourceURL />static/js/jquery.app.js"></script>
</body>
</html>

-

When finished, click Save.
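
The sketch values in the JSON Configuration appear to describe where each frame sits on a grid, with x running from 0 to 11 in the example. Under that assumption (inclusive coordinates on a 12-column grid, which this guide does not state explicitly), the following sketch checks that no two frames claim the same cell and prints a one-row preview. All function names are hypothetical.

```python
def frame_cells(frame):
    """Return the set of (x, y) grid cells covered by a frame's sketch."""
    s = frame["sketch"]
    return {(x, y)
            for x in range(s["x1"], s["x2"] + 1)
            for y in range(s["y1"], s["y2"] + 1)}


def find_overlaps(frames):
    """Return (first_pos, second_pos, cell) for every cell claimed twice."""
    owner = {}
    overlaps = []
    for frame in frames:
        for cell in sorted(frame_cells(frame)):
            if cell in owner:
                overlaps.append((owner[cell], frame["pos"], cell))
            else:
                owner[cell] = frame["pos"]
    return overlaps


def preview_row(frames, y, columns=12):
    """Render one grid row, showing the pos of the frame in each column."""
    owner = {cell: f["pos"] for f in frames for cell in frame_cells(f)}
    return " ".join(str(owner.get((x, y), ".")).rjust(2) for x in range(columns))


# The four top-bar frames from the example configuration.
top_bars = [
    {"pos": 0, "sketch": {"x1": 0, "y1": 0, "x2": 2, "y2": 0}},
    {"pos": 1, "sketch": {"x1": 3, "y1": 0, "x2": 5, "y2": 0}},
    {"pos": 2, "sketch": {"x1": 6, "y1": 0, "x2": 8, "y2": 0}},
    {"pos": 3, "sketch": {"x1": 9, "y1": 0, "x2": 11, "y2": 0}},
]

print(find_overlaps(top_bars))  # []
print(preview_row(top_bars, 0))
```

The preview shows each top bar occupying three adjacent columns, which matches the four-across layout of the navbar in the template.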

CREATE THE APPLICANT PAGE

-

From the tabs along the top, select Page Designer > Page Tree > Add.

-

Enter a page title and a code. The Code field will auto-populate with a value similar to the Title and acts as the page’s unique identifier. For example:

-

Title: Application Home

-

Code: application_home

-

-

From the Select Page Placement drop-down menu, select the page to act as this page’s hierarchical parent.

-

In the Page Groups section, select an Owner Group and a Join Group.

-

Owner Group members have administrative access to this page

-

Join Group members are able to see the page

-

-

In the Settings section, select the page model you just created from the Page Model drop-down menu. Once selected, set the remaining options in the Settings section.

-

Click Save and Configure. This opens the Page Configuration screen.

ADD WIDGETS

-

On the Page Configuration screen, locate the Widgets section on the right side of the screen.

-

Search for the following widgets and drag them into the specified frame:

-

Choose a language purple/light and drag to Top Bar 4

-

Main banner purple and drag to Central Bar 1

-

Left 3 steps purple and drag to Central Bar Left

-

Right col help purple and drag to Central Bar Right

-

Banner advisor purple/light and drag to Banner Advisor

Click the Preview button to verify the appearance of the page before publishing.

-

-

When the widgets are in place and you are satisfied with the preview, click Publish.

Only click Publish after confirming that each widget has been configured properly.
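
The widget placements listed above refer to frames by name, while the template refers to them by position. The sketch below resolves each name to its pos value using the descr fields from the page model JSON in this walkthrough. It is a bookkeeping aid only; the matching is case-insensitive because the steps and the JSON capitalize some names differently, and resolve_placements is a hypothetical helper.

```python
# (pos, descr) pairs copied from the page model JSON in this walkthrough.
FRAMES = [
    (3, "Top Bar 4"),
    (4, "Central Bar 1"),
    (6, "Central Bar left"),
    (8, "Central Bar right"),
    (9, "Banner Advisor"),
]

# Widget name -> frame name, as listed in the steps above.
PLACEMENTS = {
    "Choose a language purple/light": "Top Bar 4",
    "Main banner purple": "Central Bar 1",
    "Left 3 steps purple": "Central Bar Left",
    "Right col help purple": "Central Bar Right",
    "Banner advisor purple/light": "Banner Advisor",
}


def resolve_placements(frames, placements):
    """Map each frame position to the widget placed in it."""
    pos_by_name = {descr.lower(): pos for pos, descr in frames}
    resolved = {}
    for widget, frame_name in placements.items():
        key = frame_name.lower()
        if key not in pos_by_name:
            raise KeyError(f"no frame named {frame_name!r}")
        resolved[pos_by_name[key]] = widget
    return resolved


for pos, widget in sorted(resolve_placements(FRAMES, PLACEMENTS).items()):
    print(pos, widget)
```

Frame 7 (Central Bar Center) is intentionally left empty here; the BPM form widget is added to it in the next section.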

INTEGRATE THE BPM FORM

-

From the tabs along the top, choose Integrations > Red Hat PAM Config > Add Integration.

-

In the Connection section, add all server information. For example:

-

General Settings section:

-

Active: On

-

(Optional) Debug: On

-

-

Connection section:

-

Name: My Application

-

Host name: <my.redhat.pamInstance.com>

-

Schema: http

-

Port: 8080

-

Webapp name: kie-server

-

Username: username for the process server administrator

-

Password: password for the process server administrator

-

Conn. Timeout (in millis): 500

-

-

-

Click Test Connection to verify that the configuration is valid, then click Save.

-

Open the Page Tree by choosing the Page Designer tab > Page Tree.

-

Find your new page in the Page Tree and click its More Options button > Configure.

-

Search for the “BPM-form by Data Type” widget and drag it to the Central Bar Center frame.

-

Click the Preview button to verify the correct appearance.

-

Click Publish.

1.4.2. GETTING STARTED WITH ENTANDO AND PAM

Overview

This document provides an introduction to using the Entando App Builder integrated with Red Hat Process Automation Manager. This template application provides a page with a task list, task details, case comments (optional), and case management widgets (optional). The provided application can then be extended and customized using the App Builder functionality.

Launch the Template Application

Unzip the provided project (entando-pam-app-5.0.3.zip)

-

On a command line, cd into the application (for example, cd entando-pam-demo-app)

-

Start the project with this command:

mvn jetty:run -Djetty.reload=manual -Djetty.port=8083 -Dspring.profiles.active=swagger

-

Notes on this command and the environment

-

mvn jetty:run — Run in jetty for quick start and development

-

jetty.reload=manual — to prevent jetty from reloading changes in your project

-

-Djetty.port=8083 — Change the default port to 8083. Without this jetty will start on 8080 and usually conflict with something else in the environment

-

-Dspring.profiles.active=swagger — Enable swagger on the project so we can look at the APIs and API documentation. Disabled by default. This can also be exported as an environment variable: export spring_profiles_active=swagger

-

Wait for the project to start. Look for: Started Jetty Server. Console Reloading is Enabled

-

In a browser navigate to: http://localhost:8083/entando-pam-app-5.0.3

-

-
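
If you start the template application from a script, the command line and the resulting URL can be assembled from the same values. The sketch below only builds the argument list shown above and the matching address; the helper names are hypothetical, and joining several Spring profiles with commas is the usual Spring convention rather than something this guide states.

```python
def jetty_command(port=8083, profiles=("swagger",), reload="manual"):
    """Build the mvn argument list used to start the template application."""
    return [
        "mvn",
        "jetty:run",
        f"-Djetty.reload={reload}",
        f"-Djetty.port={port}",
        # Several Spring profiles are conventionally joined with commas.
        f"-Dspring.profiles.active={','.join(profiles)}",
    ]


def app_url(port, context_path):
    """Build the local URL for the running application."""
    return f"http://localhost:{port}/{context_path.strip('/')}"


print(" ".join(jetty_command()))
print(app_url(8083, "entando-pam-app-5.0.3"))
```

To launch the application, the list can be passed to subprocess.run(jetty_command(), cwd=project_dir), where project_dir is the unzipped project folder.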

Add the KIE server configuration

-

Navigate to http://localhost:8083/entando-pam-app-5.0.3/do/login

-

Login with

-

User: admin

-

Password: adminadmin

-

-

On the left pane select Integrations → Components → Red Hat PAM Config

-

In the upper right select Add Configuration

-

Name - Enter the name of your choice for the configuration

-

Hostname - The host where your KIE server is running, for example localhost. Do not include http:// or ports

-

Schema: One of http or https depending on where your KIE server is running

-

Port - Enter the port of the HTTP interface of your KIE server, if any. Can be left blank. For example, 8080 is common if KIE is running locally

-

WebApp Name - The value that comes after the port in your KIE URL, if any. Can be left blank. A common value is kie-server when running KIE locally

-

Username - PAM Admin username. Common value is pamAdmin

-

Password - The password for the KIE user

-

Conn. Timeout (in millis) - Millisecond timeout for connecting to KIE. Can be blank

-

-

Hit Test connection and you’ll get a green banner if the connection is valid

-

Select Save (don’t forget this)
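
The fields above combine into a single base URL: schema, hostname, optional port, and optional webapp name. The sketch below shows that composition and rejects a hostname that already contains a scheme, port, or path, as the Hostname field requires. The function kie_base_url is hypothetical and is not the code Entando uses to build the address.

```python
def kie_base_url(hostname, schema="http", port=None, webapp_name=None):
    """Compose the KIE server base URL from the configuration fields."""
    if schema not in ("http", "https"):
        raise ValueError("schema must be http or https")
    # The Hostname field must not include http://, a port, or a path.
    if "://" in hostname or ":" in hostname or "/" in hostname:
        raise ValueError("hostname must not include a scheme, port, or path")

    url = f"{schema}://{hostname}"
    if port:
        url += f":{int(port)}"
    if webapp_name:
        url += "/" + webapp_name.strip("/")
    return url


print(kie_base_url("localhost", port=8080, webapp_name="kie-server"))
# http://localhost:8080/kie-server
print(kie_base_url("pam.example.com", schema="https"))
# https://pam.example.com
```

Leaving Port and WebApp Name blank simply drops those parts from the address; pam.example.com is a placeholder host.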

Configure the Widgets to Use Your PAM Connection

The demo app comes with a collection of widgets pre-applied in the pages but they need to be configured to use the new KIE configuration created in Part 2 above.

-

In the left nav select Page Designer → Page Tree

-

In the page tree in the kebab menu on the right select the menu for the page named Task List

-

Select Configure

-

On this page the widget in the middle of the screen called PAM-Datatable Task List holds the task list for our application. Select the kebab menu on the right and select settings

-

On this page fill out the configuration for your new PAM instance

-

Knowledge Source - this will be the name of the PAM integration you selected and configured above

-

Process - The process you want to fetch tasks for

-

Open new page on table row click - Select ON

-

Show Claim button - Select ON

-

Show Complete Button - Select ON

-

Under BPM Groups - Select ON for all of the provided groups

-

Note: If you are modeling a process with different groups, add those groups to Entando under the left menu Setting → Groups, entering names that exactly match the groups used in PAM

-

-

Override Fields

-

These are the columns that will show up in your table. They are fetched from Task and Process variables. You can provide a different column header under Override fields and remove columns using the check boxes

-

-

-

Select Save at the bottom of the screen

-

Hit the green Publish button at the top of the page

-

In the upper right select Pages

-

On the row labeled task-details, click the row and then select the kebab button on the right

-

Select configure

-

Select the kebab button on the widget labeled PAM-Task Form by Data Type

-

Select settings

-

Select the Knowledge Source and Process

-

Click Save

-

Click the green publish button

-

Optional step

-

If the application you are building is using Case Management functionality you can also add the PAM Case Comments widget to the box labeled Widget 4 by searching for the widget on the right and dragging it to the page. Then configure that widget with the Knowledge Source and Process

-

Click Save and Publish

-
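
The Override Fields settings described above do two things to the task table: rename column headers and hide columns. The sketch below applies both to a list of rows. The field names (taskId, taskName, processInstanceId) are hypothetical examples of task and process variables, and apply_overrides is not part of the widget.

```python
def apply_overrides(rows, headers=None, hidden=()):
    """Rename columns per `headers` and drop the columns listed in `hidden`."""
    headers = headers or {}
    return [
        {headers.get(name, name): value
         for name, value in row.items()
         if name not in hidden}
        for row in rows
    ]


# Hypothetical task rows as they might arrive from task and process variables.
tasks = [
    {"taskId": 12, "taskName": "Review application", "processInstanceId": 7},
    {"taskId": 13, "taskName": "Approve loan", "processInstanceId": 7},
]

table = apply_overrides(
    tasks,
    headers={"taskId": "ID", "taskName": "Task"},
    hidden=("processInstanceId",),
)
print(table[0])  # {'ID': 12, 'Task': 'Review application'}
```

The underlying data is unchanged; only the presentation of the table differs.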

1.5. CONTENT MANAGEMENT SYSTEM

1.5.1. INTRODUCTION

Access Entando’s Content Management System features from the left pane by selecting APPS > CMS. The CMS modules contain functions that let you manage contents, their type, model, and digital assets. This section provides an overview of the tasks associated with each of the CMS subsections, which are not necessarily arranged sequentially for a first-time setup.

-

Contents

-

Digital Assets

-

Content Types

-

Content Models

-

Content Settings

Generally speaking, the flow for creating content is: Create a Content Type > Create a Content Model for the newly created Type > Create Contents and Digital Assets > Publish the contents

DEFINING CONTENT MANAGEMENT SECTIONS

- Contents

-

Contents are the human-consumable pieces of information presented in your applications, such as newspaper articles, blog posts, press releases, and their associated images and attachments. The Contents section is where you manage your content, and it includes a search utility for quickly accessing your assets.

- Digital Assets

-

Digital Assets are the rich media files you can use to provide a more robust experience in your application, including photos, audio and video files, and documentation. By using the Digital Assets section, you create a repository from which you can access any asset with minimal effort, can repurpose it in multiple locations, and specify user and management rights to that asset.

The Entando platform divides Digital Assets into two broad categories: Images (jpg/jpeg and png files) and Attachments (all other file formats).

- Content Types

-

Entando’s Content Types define the different content elements in your repository and enable you to manage settings for each category in a centralized, reusable way. Each type is defined by a series of attributes unique to that type.

- Content Models

-

Content Models are templates for your information. They determine a structure for how the content is organized, similar to file templates in Microsoft Word or PowerPoint.

- Content Settings

-

Content Settings provide a method for managing aspects of your Digital Assets such as metadata as well as activating or deactivating Entando’s WYSIWYG editor called CKEditor.

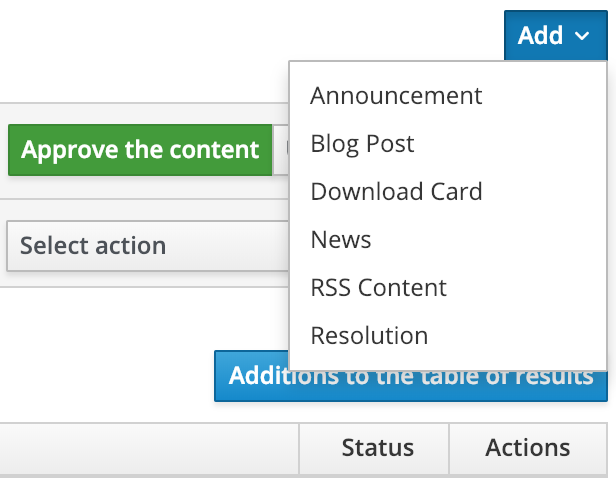

1.5.2. CONTENTS SECTION

-

Click the Add button and select the Content Type to create.

-

Populate each field with the requisite information. See Creating a New Page for specifics on Owner Groups and Join Groups. When each field is complete, click Save.

-

Once created, your content appears in the table of results at the bottom of the Content List page. You can edit or copy and paste the content through the menu under the Actions column.

You must publish your content in the CMS before you can display it in an application, and you can unpublish it to remove it from display. To publish or unpublish content:

-

Select the checkbox next to the requisite content item. You may select multiple content items at once.

-

Above the content table, click either the Approve the content or Unpublish button.

ADDING IMAGES AND TAGS

You can add images from the Digital Assets repository to content. When you do, the image comes with five image tags:

-

Text

-

Alt

-

Description

-

Legend

-

Title

You have the option to manually assign values to these tags or automatically populate them with metadata values.

-

Add a new content or select a content from the table and click Actions > Edit.

-

Click the Picture button and select the Digital Asset to include.

-

Populate the image tags with values and then click Save.

-

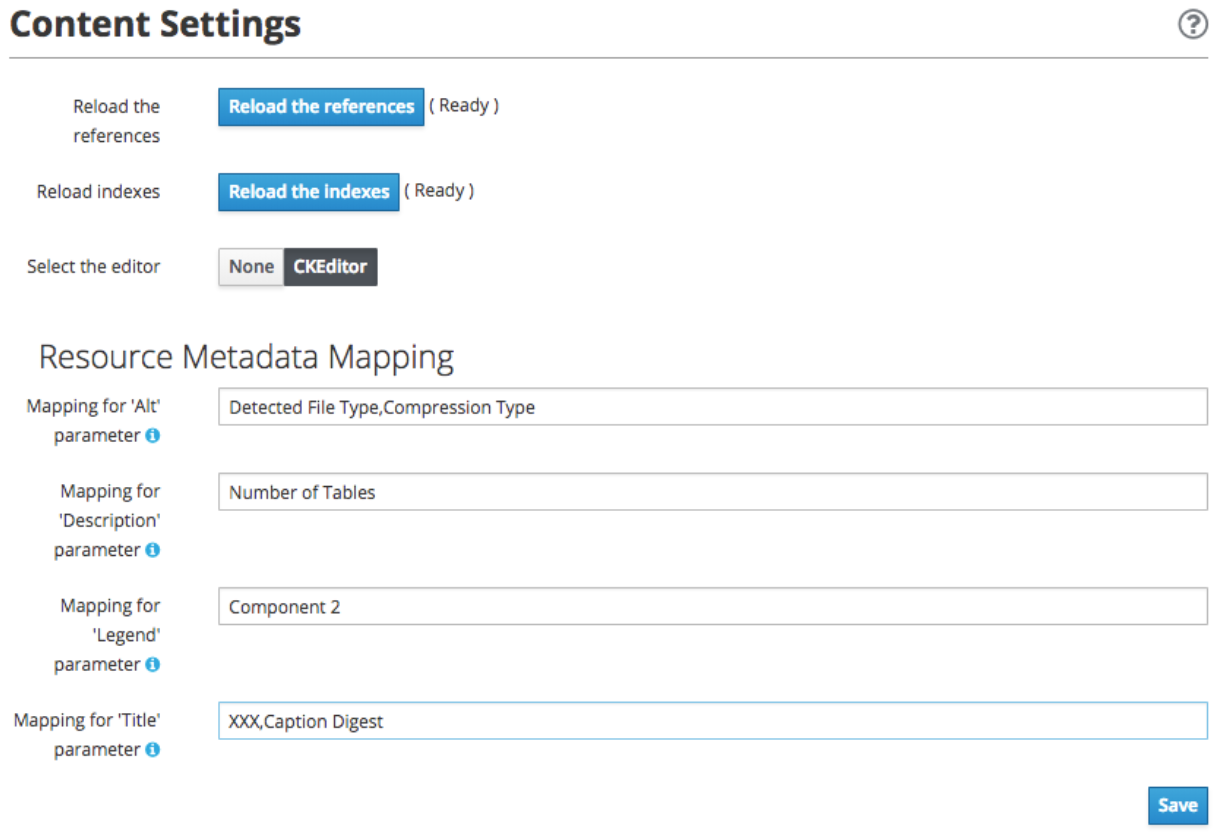

Select Apps > CMS > Content Settings.

-

In the Resource Metadata Mapping section, populate any of the fields with the value in the Name column of the Metadata table. You can add multiple values by separating them with commas.

For example, enter “Detected File Type,Compression Type” in the Alt field to instruct Entando to use those values in the image’s Alt tag.

1.5.3. DIGITAL ASSETS

The Digital Assets section separates content into Images and Attachments via tabs along the top of the Digital Assets home screen.

-

Choose the Images or Attachments tab at the top of the screen, depending on the asset to upload, and click Add.

-

Select a Group and home category, and name the asset.

-

Click the Choose File button to select the file to add to the CMS.

-

Click Done. The asset appears in the asset list at the bottom of the List of Images or Attachments screen.

EDITING ASSETS

Once you have a digital asset in place, you have several editing and viewing options. Click the asset’s More Actions button and select "Edit" to update the asset’s name, location, and the file represented.

WORKING WITH AN ASSET’S METADATA

Starting with Entando 4.3.4, the platform has captured metadata for every image you have uploaded. View the metadata from the Images tab of the Digital Assets screen by clicking an asset’s Actions menu > Edit. The metadata appears in a table at the bottom of the screen.

See the Adding Images and Tags section for details on using image metadata in contents.

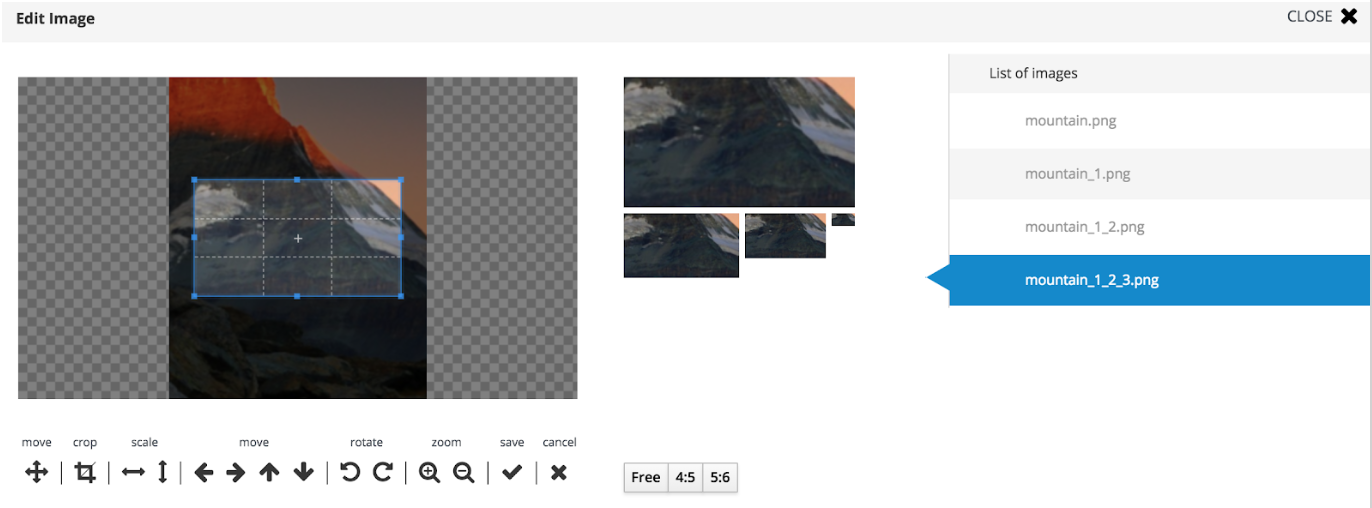

CROPPING IMAGES

Beginning with Entando 4.3.5, you can crop images newly uploaded via Digital Assets. You can crop the image either dynamically or by using pre-configured crop ratios.

-

Select Apps > CMS > Content Settings.

-

Under “Settings Image Crop Dimensions,” click Add.

-

Enter a crop ratio, using numeric values separated by a colon. See the screenshot below for an example.

-

Click Save.

-

Uploading an image to digital assets opens the Edit Image screen with tools to crop the image.

-

Buttons representing your pre-defined crop ratios are below the image. Clicking any of those buttons changes the selected crop area based on the chosen ratio.

-

You can create multiple crops of a single image as part of the upload process. The right side of the screen displays the list of crops created during the current session.

-

Use your mouse or the buttons below the image to move or change the crop area, then click Save to crop the selected area. Repeat the process as many times as needed. Each new crop of the image appears under the List of Images to the right of the screen.

-

Click Close to return to the Add Digital Assets screen. Notice that each individual crop of the image now has its own row in the list of resources, as well as a More Actions button you can use to edit or delete the image.

-

Click Done to exit the Edit screen.
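As a rough illustration of what a crop ratio such as 16:9 means for a given image, the sketch below computes the largest centered crop area that matches a ratio. It is not Entando's cropping code; the function names and the choice to center the crop are assumptions made only for this example.

```python
def parse_ratio(text):
    """Parse a crop ratio such as "16:9" into a (width, height) pair."""
    width_text, height_text = text.split(":")
    width, height = float(width_text), float(height_text)
    if width <= 0 or height <= 0:
        raise ValueError("ratio values must be positive")
    return width, height


def centered_crop(image_width, image_height, ratio_text):
    """Return (left, top, width, height) of the largest centered crop
    of the image that has the requested ratio (hypothetical helper)."""
    ratio_w, ratio_h = parse_ratio(ratio_text)
    # Grow the ratio until it touches the first edge of the image.
    scale = min(image_width / ratio_w, image_height / ratio_h)
    crop_w = round(scale * ratio_w)
    crop_h = round(scale * ratio_h)
    left = (image_width - crop_w) // 2
    top = (image_height - crop_h) // 2
    return left, top, crop_w, crop_h


print(centered_crop(1920, 1200, "16:9"))
```

For a 1920 x 1200 image and a 16:9 ratio, the crop keeps the full width and trims the height to 1080 pixels, 60 pixels from the top and bottom.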

1.5.4. CONTENT TYPES

Content Types determine the fields that differentiate one piece of content, such as a blog post, from another, like an employee profile.

-

Navigate to APPS > CMS > Content Types and click the Add button.

-

Assign a Code for the type and then a Name, such as Blog Post or Employee Profile.

-

In the Attributes section, use the Type drop-down menu to select an attribute that this type of content needs. For example, a blog post would require fields such as Author, Title (text), Timestamp, and Picture (Image). Click the Add button to add the attribute to your content type.

-

In the Add Attribute screen, enter code and name values for this attribute. The remaining fields on the screen are optional. When finished, click Continue to return to the Add screen.

-

Add as many additional attributes as necessary and then click Save. The content type will appear in the table at the top of the Content Types screen.

To edit a content type, click the Actions menu in-line with a content type and select "Edit."

1.5.5. CONTENT MODELS

Content models determine how the CMS arranges your content. The Model section defines the structural layout while any stylesheet that you attach handles the design elements like font, color, and link styles.

-

Navigate to APPS > CMS > Content Models and click the Add button.

-

Select the Content Type that this model applies to, then enter identifiers in the Name and Code fields. Selecting the Content Type helps the Inline Editing feature suggest attributes that are available to arrange. For example, if your Blog Post content type contains attributes for Title, Picture, and Caption, the Inline Editor will be able to suggest those attributes as you enter code into the Model field.

-

Enter code into the Model field. Entando uses Apache Velocity as its template engine to reference objects in Java code. If you’ve selected a Content Type, the Inline Editing Assist will format the code for easier reading.

Hint: Press CTRL + SPACE to open Content Assist.

1.5.6. CONTENT SETTINGS

The Content Settings section is for fine-tuning content that you have added from the Digital Assets section. Here you can autopopulate or exclude metadata for images, and add or remove metadata fields.

AUTOPOPULATING IMAGE TAGS

You can also manually adjust metadata for an image in the Contents section.

-

Select Apps > CMS > Content Settings.

-

In the Resource Metadata Mapping section, populate any of the fields with the value in the Name column of the Metadata table. You can add multiple values by separating them with commas.

For example, enter “Detected File Type,Compression Type” in the Alt field to instruct Entando to use those values in the image’s alt tag.
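The comma-separated format of a mapping field can be sketched as follows. This is only an illustration of splitting the field and looking up each name in an image's metadata table. It does not show how Entando combines multiple matches, and the function names and sample metadata values are invented for the example.

```python
def split_mapping(field_value):
    """Split a mapping field such as "Detected File Type,Compression Type"
    into a list of metadata names, ignoring stray spaces and empty entries."""
    return [name.strip() for name in field_value.split(",") if name.strip()]


def lookup_mapped_values(field_value, metadata):
    """Return (name, value) pairs for each mapped name found in the metadata
    (hypothetical helper, not part of Entando)."""
    return [(name, metadata[name])
            for name in split_mapping(field_value)
            if name in metadata]


# Made-up metadata rows standing in for the Name/Value columns of the Metadata table.
sample_metadata = {
    "Detected File Type": "JPEG",
    "Compression Type": "Baseline",
    "Image Width": "800 pixels",
}

print(lookup_mapped_values("Detected File Type,Compression Type", sample_metadata))
```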

EXCLUDING METADATA

You can exclude metadata fields by name by setting the following property in systemParams.properties:

jacms.imgMetadata.ignoreKeys

For example:

jacms.imgMetadata.ignoreKeys=Blue TRC,Red TRC,Green TRC
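To make the effect of the property concrete, the sketch below parses a line in the format shown above and drops the listed names from a metadata table. It is a simplified illustration, not Entando's implementation; the helper names and the sample metadata are assumptions.

```python
def parse_ignore_keys(property_line):
    """Return the set of metadata names listed in a
    jacms.imgMetadata.ignoreKeys=... line."""
    name, _, value = property_line.partition("=")
    if name.strip() != "jacms.imgMetadata.ignoreKeys":
        raise ValueError("unexpected property: " + name.strip())
    return {key.strip() for key in value.split(",") if key.strip()}


def drop_ignored(metadata, ignore_keys):
    """Return a copy of the metadata without the ignored names
    (hypothetical helper)."""
    return {name: value for name, value in metadata.items()
            if name not in ignore_keys}


ignored = parse_ignore_keys(
    "jacms.imgMetadata.ignoreKeys=Blue TRC,Red TRC,Green TRC")

# Made-up metadata values used only for this example.
sample = {"Blue TRC": "0.0, 0.0", "Compression Type": "Baseline"}
print(drop_ignored(sample, ignored))
```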

ADDING AND REMOVING METADATA FIELDS

You can customize the metadata fields displayed in the Resource Metadata Mapping section. By default, the values are Alt, Description, Legend, and Title, but you can add and remove fields.

-

Enter values in the Key and Mapping fields in line with the “Add metadata” field. The Key field represents the metadata’s name (e.g., Alt or Title). The Mapping field defines the value that populates the field.

-

Click Add. You can add as many new metadata fields as necessary.

-

Click the Delete button in line with the field to remove.

Entando does not display a warning before deleting the field, and a deleted field cannot be recovered.

1.6. ENTANDO PWA

Progressive Web Apps (PWAs) let you deploy your apps to different platforms with little effort. Whether you use one on the web or as a native-feeling app, a PWA is an efficient way to deploy your app quickly.

Entando PWA builds on this technology to provide content-driven solutions that deploy in less time.

1.6.1. DEFINING THE STRUCTURE

The system has two main instances:

-

PWA Provider - An Entando core instance that manages your content through the CMS (Content Management System).

-

PWA Shell - The interface-level instance where you deploy and view the content from your PWA Provider.

1.6.2. INSTALLATION

This section provides step-by-step instructions for launching your first Entando PWA on your local machine.

PREREQUISITES

Ensure that you have installed (mvn install) both Entando Core and Entando Components.

You also need a running DBMS (Postgres or MySQL) ready for the installation.

GIT CLONE

Enter this in your command line: git clone https://github.com/entando/entando-pwa.git

After cloning, entando-pwa contains two folders: "pwa-provider" and "pwa-shell". Go into "pwa-provider" and follow the next steps.

PROVIDER INSTANCE SETUP

This section is a quick guide to installing and running the provider instance.

1. CREATING DATABASE

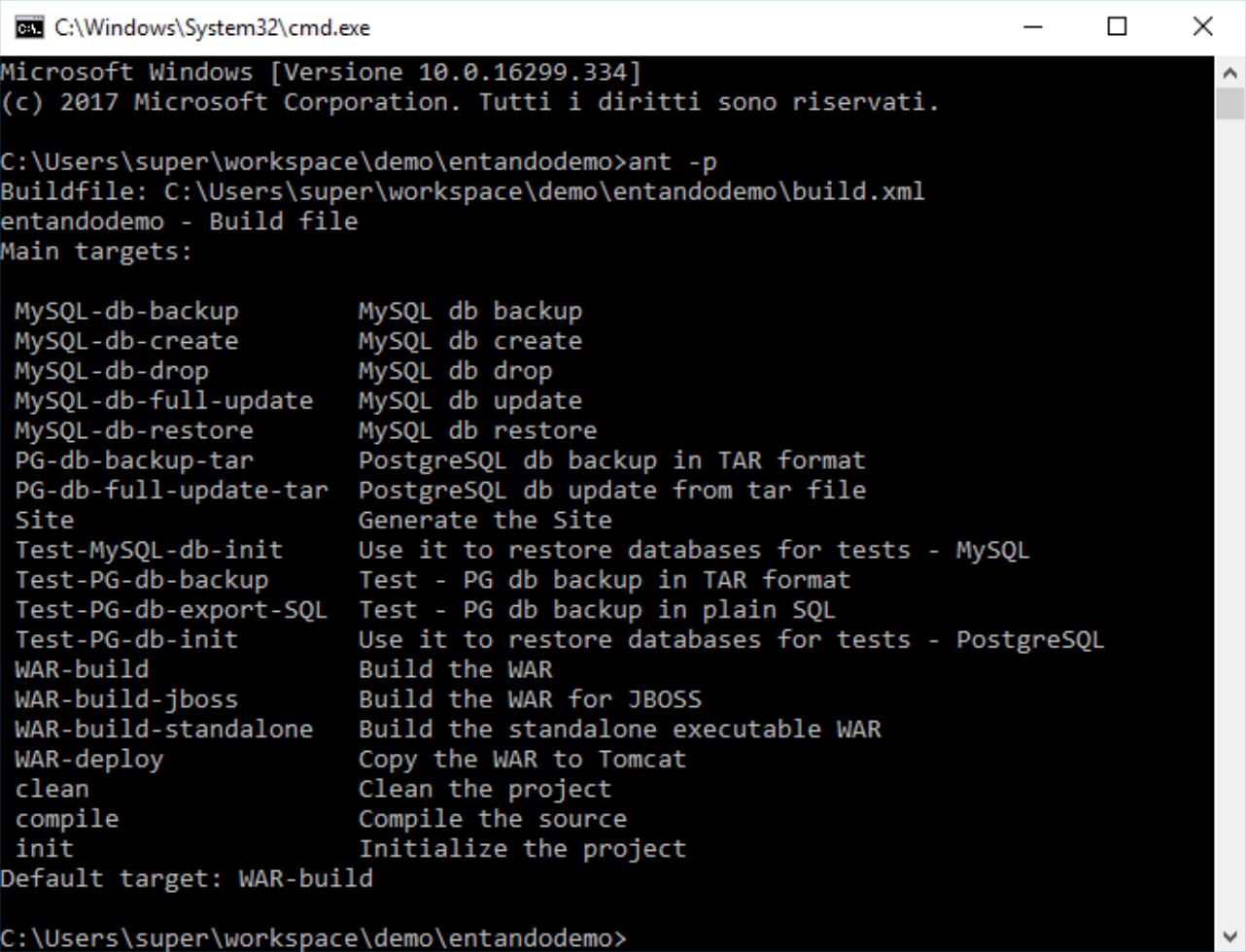

The PWA provider includes Ant tasks so that you do not need to set up the database manually. Refer to the Developers' guide for configuring Ant and running its tasks. By default, the system is set up for a Postgres database environment, so if you prefer a different database, indicate it in "pwa-provider/buildproperties.xml".

For this procedure, assuming that you have PostgreSQL installed, run ant PG-db-create under "pwa-provider" to create the Postgres database.

2. SETUP PWA PROVIDER SYSTEM PARAMETERS

The provider has been configured to use Keycloak authentication, but this guide uses the default Entando authentication included in the provider instance. To confirm that the authentication type is configured properly, open "pwa-provider/src/main/config/systemParams.properties" and check, toward the end of the configuration, that Keycloak mode is not enabled: the line with keycloak.enabled must be set to false.
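If you prefer to check the setting with a script rather than by eye, the following sketch reads properties text and reports whether keycloak.enabled is false. The parser is deliberately simplified (it handles only plain key=value lines and comments, not the full Java properties syntax), and the function names are assumptions for this example.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and comment lines.
    This is a simplified reader, not a full .properties parser."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, separator, value = line.partition("=")
        if separator:
            props[key.strip()] = value.strip()
    return props


def keycloak_disabled(text):
    """Return True only when keycloak.enabled is explicitly set to false."""
    return parse_properties(text).get("keycloak.enabled", "").lower() == "false"


# Sample text standing in for the end of systemParams.properties.
sample_text = "# authentication\nkeycloak.enabled=false\n"
print(keycloak_disabled(sample_text))
```

To run the check against the real file, read "pwa-provider/src/main/config/systemParams.properties" and pass its contents to keycloak_disabled.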

3. RUNNING THE INSTANCE

In the console, still under the "pwa-provider" folder, enter the command mvn jetty:run to launch the PWA Provider instance. The first launch can take longer because Maven has to download dependencies.

When you see these lines in the console:

[INFO] Started ServerConnector@23746f63{HTTP/1.1,[http/1.1]}{0.0.0.0:8080}

[INFO] Started @80840ms

[INFO] Started Jetty Server

This means your provider instance is ready. You can access it at http://localhost:8080/pwa-provider using your web browser. This is the same interface as your Entando Core instance. Refer to the CMS section for setting up and publishing your content.
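When the provider is started from a script, the console lines above can be checked automatically. The sketch below scans captured console output for the readiness line and extracts the port from the ServerConnector line. The function names are assumptions, and the patterns are based only on the three sample lines shown here, so other Jetty versions may log differently.

```python
import re


def provider_ready(console_lines):
    """Return True once the output contains the 'Started Jetty Server' line."""
    return any("Started Jetty Server" in line for line in console_lines)


def listening_port(console_lines):
    """Return the port from the 'Started ServerConnector' line, or None."""
    pattern = re.compile(r"Started ServerConnector@.*:(\d+)\}")
    for line in console_lines:
        match = pattern.search(line)
        if match:
            return int(match.group(1))
    return None


# The console lines quoted in this guide.
sample_lines = [
    "[INFO] Started ServerConnector@23746f63{HTTP/1.1,[http/1.1]}{0.0.0.0:8080}",
    "[INFO] Started @80840ms",
    "[INFO] Started Jetty Server",
]
print(provider_ready(sample_lines), listening_port(sample_lines))
```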

Now that your provider is up and running, it’s time to set up your PWA-powered web app!

PWA INSTANCE SETUP

This section provides quick steps for installing your Entando-powered Progressive Web App.

1. INSTALL NPM DEPENDENCIES